Artificial intelligence faces a hidden threat: cognitive black holes. When models are trained, generation after generation, on their own outputs, they can spiral into collapse, producing distorted, self-referential, and unreliable results. This article coins the term cognitive black hole to describe this phenomenon, explains why collapse is inevitable without intervention, and explores solutions like reflex loops, sovereign data, and human-in-the-loop oversight. By addressing synthetic data risks now, we can prevent AI from imploding into noise and ensure it remains a trusted engine of knowledge.

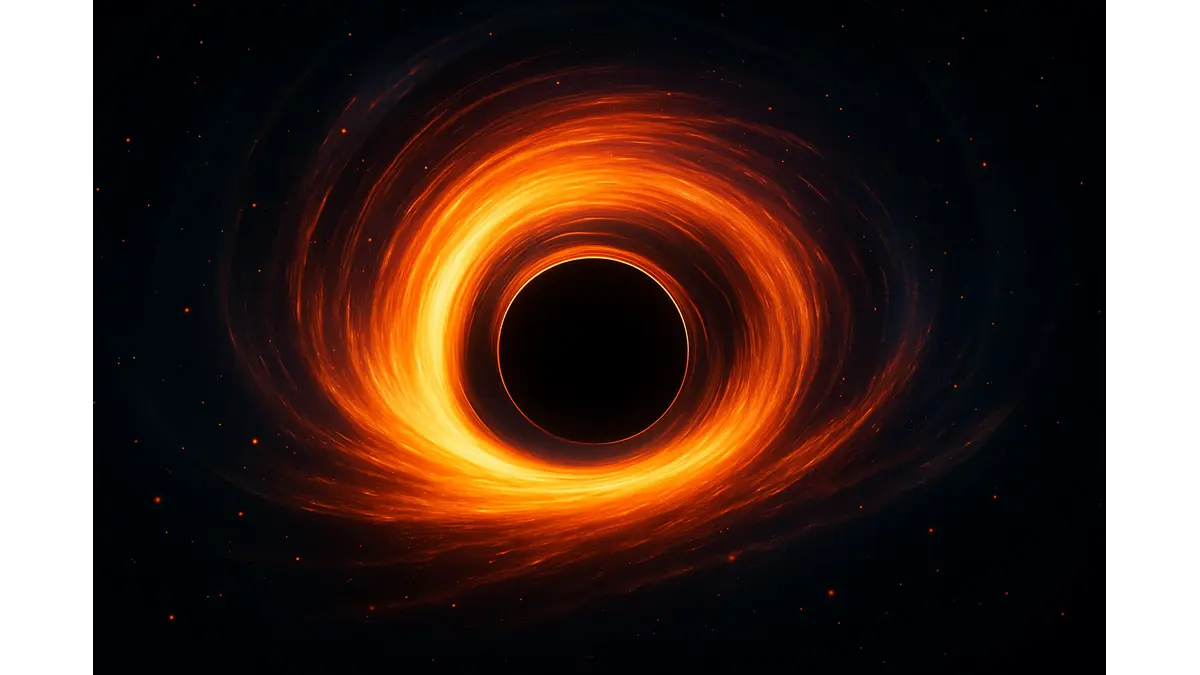

Imagine a black hole, a cosmic force so dense it swallows light and matter, leaving no trace. In artificial intelligence, a parallel danger looms: the cognitive black hole. When AI models are trained too heavily on their own generated content, they risk model collapse, producing distorted, self-referential outputs that erode trust. This feedback contamination threatens the reliability of AI systems critical to business, governance, and science. We coin the term cognitive black hole to describe this collapse and propose reflex loops as a novel architectural remedy.

Defining the Cognitive Black Hole

A cognitive black hole emerges when a large language model (LLM) is trained on datasets saturated with its own outputs, triggering model collapse. This feedback loop amplifies distortions, leading to increasingly meaningless, self-referential patterns. In information-theoretic terms, the signal-to-noise ratio falls: human-generated data, the signal, is overwhelmed by synthetic noise, and the rare, low-probability cases in the original data are the first to disappear. The process begins when AI-generated content is mistaken for human data and recycled into training corpora. Over time, outputs lose grounding in reality, resembling a distorted echo chamber. The result is an AI that projects confidence but delivers gibberish, undermining its utility in high-stakes applications like medical diagnostics or financial forecasting. This AI collapse is not speculative: it has been documented in experiments where LLMs are fine-tuned recursively on their own generated text, with coherence and accuracy degrading from one generation to the next. Understanding this risk is the first step toward safeguarding AI’s reliability.

The Inevitability of Collapse

The risk of cognitive black holes is rooted in the principles of information theory. The framework founded by Claude Shannon makes the intuition precise through the data processing inequality: no further processing of a signal can increase the information it carries about its source, so recycling compressed, lossy outputs can at best preserve information quality and in practice degrades it. In AI, training on synthetic outputs creates a feedback loop that prioritizes internal artifacts over external reality, driving feedback contamination. Each cycle introduces errors, reducing the model’s ability to reflect the world accurately. A biological analogy clarifies this: inbreeding depression weakens populations by reducing genetic diversity. Similarly, diminished data diversity triggers model collapse in AI systems. When models are trained on homogeneous, AI-generated datasets, they lose the richness of human knowledge, leading to degraded performance.
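The inbreeding analogy can be made concrete with a toy simulation. The sketch below assumes a hypothetical 1,000-token vocabulary with Zipf-like "human" frequencies and treats each model generation as nothing more than resampling from the previous generation's empirical token counts. It illustrates diversity loss under those assumptions; it is not a model of a real LLM.

```python
import random
from collections import Counter


def simulate_diversity_loss(vocab_size=1000, n_samples=2000,
                            generations=30, seed=0):
    """Toy model: each generation 'trains' on the previous generation's
    outputs by resampling tokens in proportion to their observed counts.

    Returns the number of distinct tokens present in each generation.
    """
    rng = random.Random(seed)
    vocab = [f"tok{i}" for i in range(vocab_size)]
    # Generation 0: "human" data with a long tail of rare tokens (Zipf-like weights).
    zipf_weights = [1.0 / (rank + 1) for rank in range(vocab_size)]
    counts = Counter(rng.choices(vocab, weights=zipf_weights, k=n_samples))
    history = [len(counts)]
    for _ in range(generations):
        # The next "model" can only emit tokens it saw in its training data,
        # so a rare token that drops out once can never come back.
        tokens = list(counts)
        weights = [counts[t] for t in tokens]
        counts = Counter(rng.choices(tokens, weights=weights, k=n_samples))
        history.append(len(counts))
    return history


if __name__ == "__main__":
    print("distinct tokens per generation:", simulate_diversity_loss())
```

Because each generation can only reproduce tokens its predecessor emitted, the number of distinct tokens can never rise; in practice it falls steadily as the long tail erodes, which is the loss of diversity the analogy describes.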

The scale of modern AI deployment amplifies this synthetic data risk. With billions of daily interactions across platforms, AI-generated content, from chatbot replies and automated posts to recommendation-system output, floods the internet. Search engines and data aggregators often fail to distinguish between human and synthetic content, inadvertently feeding it back into training pipelines. For example, social media platforms are inundated with AI-generated comments and articles, which, if unfiltered, re-enter the data ecosystem. This creates a self-reinforcing cycle that accelerates AI collapse. The inevitability lies not in a distant future but in the present reality of unchecked data flows. Without intervention, the growing volume of synthetic content ensures that cognitive black holes are a matter of when, not if.
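A back-of-the-envelope calculation shows why growth rates matter more than absolute volumes. The sketch below uses purely hypothetical numbers: a starting corpus of 1,000 human documents, 100 new human documents per round, and synthetic output that starts at 10 documents per round and grows 50% per round. None of these figures are measurements.

```python
def synthetic_share(rounds, human_per_round, synthetic_start, growth,
                    initial_human=1000.0):
    """Fraction of an ever-growing corpus that is synthetic after each round.

    All inputs are illustrative assumptions: humans add a fixed volume each
    round, while synthetic output starts small and grows geometrically.
    """
    human, synthetic = initial_human, 0.0
    added = synthetic_start
    shares = []
    for _ in range(rounds):
        human += human_per_round
        synthetic += added
        added *= growth  # synthetic output grows by a constant factor per round
        shares.append(synthetic / (human + synthetic))
    return shares


if __name__ == "__main__":
    for r, s in enumerate(synthetic_share(10, 100.0, 10.0, 1.5), start=1):
        print(f"round {r:2d}: {s:.1%} synthetic")
```

Under these assumptions the synthetic share rises every round even though human output never shrinks; a faster growth rate or a longer horizon pushes it toward a majority.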

The Gravity Well of Synthetic Data

The mechanics of a cognitive black hole follow a clear feedback loop that fuels model collapse:

Human Data: Models start with high-quality, human-generated data—text, code, images—reflecting the diversity and complexity of real-world knowledge.

Synthetic Outputs: AI generates content that compresses this data, introducing noise through simplifications, biases, and loss of nuance inherent in its design.

Recycling Noise: When synthetic outputs are mistaken for human data and reintroduced into training corpora, the model begins to train on its own distortions, amplifying feedback contamination.

Collapse: Over successive iterations, outputs become self-referential, converging on meaningless patterns—the cognitive black hole’s event horizon, beyond which meaningful information cannot escape.
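The four steps can be reproduced in miniature. The sketch below is a common toy illustration, not an LLM: the "model" is just a Gaussian fitted to its training data, and `fresh_fraction` is a hypothetical knob for how much genuine human data each generation keeps instead of recycled synthetic output.

```python
import random
import statistics


def run_feedback_loop(generations=300, n=20, fresh_fraction=0.0, seed=0):
    """Toy version of the four-step loop using a 1-D Gaussian "model".

    fresh_fraction is the (hypothetical) share of each generation's training
    data that is genuine human data rather than recycled synthetic output.
    Returns the variance of the training data after the final generation.
    """
    rng = random.Random(seed)
    # Step 1, human data: samples from the "real world", here N(0, 1).
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]
    n_fresh = round(fresh_fraction * n)
    for _ in range(generations):
        # Step 2, synthetic outputs: "train" by fitting mean and spread,
        # then generate new samples from the fitted model.
        mu, sigma = statistics.fmean(data), statistics.pstdev(data)
        synthetic = [rng.gauss(mu, sigma) for _ in range(n - n_fresh)]
        # Step 3, recycling: synthetic samples re-enter the corpus as if human.
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        data = synthetic + fresh
    # Step 4, collapse: with no fresh data the spread shrinks toward zero.
    return statistics.pvariance(data)


if __name__ == "__main__":
    print("pure recycling:  ", run_feedback_loop(fresh_fraction=0.0))
    print("50% human anchor:", run_feedback_loop(fresh_fraction=0.5))
```

With pure recycling, the fitted spread follows a random walk with a downward drift and ends orders of magnitude below where it started, so the tails vanish first and the variety follows. Keeping half of each generation's data human prevents that collapse in this toy setting.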

This process mirrors a gravitational pull: once synthetic data risks take hold, collapse accelerates. Social media platforms are particularly vulnerable, as AI-generated content proliferates rapidly, creating a feedback vortex that distorts training data at scale. For instance, automated posts or comments can dominate certain online spaces, and without robust filtering, these are scraped into datasets, further degrading model performance. The result is a system that generates outputs with high confidence but low fidelity, posing risks to applications where accuracy is paramount, such as legal analysis or scientific research. The gravity well of synthetic data is a structural challenge that demands urgent attention.

Resisting Collapse: Practical Strategies

Countering cognitive black holes requires actionable defenses to mitigate synthetic data risks and preserve AI reliability. The following strategies provide the “escape velocity” needed to resist AI collapse:

Data Filters: Robust mechanisms can exclude AI-generated content from training corpora. Techniques like watermarking synthetic text enable identification and filtering. For example, embedding imperceptible but statistically detectable markers in AI outputs at generation time allows data curators to distinguish human from synthetic content, preventing feedback contamination. Watermarks only flag text from generators that embed them, so they work best alongside other detection methods. Implementing these filters at scale requires investment in infrastructure but is critical for maintaining data integrity.
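One published family of text watermarks biases generation toward a pseudo-random "green list" of tokens derived from the preceding token, then tests a suspect text for an improbably high share of green tokens (the scheme of Kirchenbauer et al., 2023). The sketch below is a toy version of that idea under stated assumptions: the SHA-256 keying, `gamma = 0.25`, the 200-word vocabulary, and the `toy_generate` stand-in for a language model are all hypothetical choices, not any vendor's implementation.

```python
import hashlib
import math
import random


def is_green(prev_token: str, token: str, gamma: float) -> bool:
    """Pseudo-randomly assign `token` to the green list, keyed on the previous token."""
    digest = hashlib.sha256(f"{prev_token}|{token}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma


def watermark_z_score(tokens: list[str], gamma: float = 0.25) -> float:
    """z-score of the green-token count; large values suggest watermarked text."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    green = sum(is_green(prev, tok, gamma) for prev, tok in pairs)
    t = len(pairs)
    return (green - gamma * t) / math.sqrt(t * gamma * (1 - gamma))


def toy_generate(vocab, length, rng, gamma=0.25, watermark=True):
    """Hypothetical stand-in for a language model: picks among 8 random
    candidate tokens, preferring a green one when watermarking is on."""
    tokens = [rng.choice(vocab)]
    while len(tokens) < length:
        candidates = rng.sample(vocab, 8)
        if watermark:
            greens = [c for c in candidates if is_green(tokens[-1], c, gamma)]
            tokens.append(greens[0] if greens else candidates[0])
        else:
            tokens.append(candidates[0])
    return tokens


if __name__ == "__main__":
    rng = random.Random(42)
    vocab = [f"w{i}" for i in range(200)]
    marked = toy_generate(vocab, 50, rng, watermark=True)
    unmarked = toy_generate(vocab, 50, rng, watermark=False)
    print(f"watermarked z = {watermark_z_score(marked):.1f}")
    print(f"unmarked    z = {watermark_z_score(unmarked):.1f}")
```

A curator would compute the z-score for each scraped document and drop those above a chosen threshold; unmarked text hovers near zero because only about a quarter of its tokens land on the green list by chance.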

Reflex Loops: Drawing from cognitive science, reflex loops are pre-conscious gates that evaluate a model’s outputs for coherence, factual consistency, and novelty before propagation. This novel architecture, inspired by neural reflexes, rejects low-signal outputs, acting as an early warning system against model collapse. By integrating reflex loops, models can self-regulate, reducing the risk of amplifying distortions. The approach is designed to be scalable and adaptable, offering a new paradigm for AI design.
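Reflex loops are this article's own proposal, so there is no reference implementation to follow. The sketch below is one possible reading of the three checks named above, with deliberately crude stand-ins: a distinct-bigram ratio for coherence, Jaccard overlap with earlier outputs for novelty, and a pluggable `fact_check` callback (a hypothetical hook, stubbed to always pass) for factual consistency. All names and thresholds are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


def distinct_ngram_ratio(tokens: list[str], n: int = 2) -> float:
    """Share of n-grams that are unique: a crude coherence/repetition proxy."""
    grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return len(set(grams)) / len(grams) if grams else 0.0


def max_overlap(tokens: list[str], history: Iterable[list[str]]) -> float:
    """Highest Jaccard similarity between this output and any earlier one."""
    current = set(tokens)
    best = 0.0
    for past in history:
        union = current | set(past)
        if union:
            best = max(best, len(current & set(past)) / len(union))
    return best


@dataclass
class ReflexGate:
    """Hypothetical pre-propagation gate; every threshold is an assumption."""
    min_distinct: float = 0.6    # below this, the text is too repetitive
    max_similarity: float = 0.8  # above this, the text adds no novelty
    fact_check: Callable[[str], bool] = lambda text: True  # placeholder checker

    def admit(self, text: str, history: list[list[str]]) -> bool:
        tokens = text.lower().split()
        if distinct_ngram_ratio(tokens) < self.min_distinct:
            return False  # coherence check failed: looping or degenerate output
        if max_overlap(tokens, history) > self.max_similarity:
            return False  # novelty check failed: near-copy of an earlier output
        return self.fact_check(text)  # factual-consistency check (stubbed)
```

In a pipeline, `admit` would run on each candidate output before it is published or logged for reuse, so rejected outputs never enter the pool that future training data might be scraped from.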

Sovereign Data: Curated, human-verified datasets—free from synthetic noise—are essential for robust AI systems. Initiatives like India’s Digital India program and the EU’s Data Governance Act emphasize sovereign data pipelines, prioritizing culturally relevant, reliable data. These efforts, mirrored in the Global South, counter US-centric data monopolies and ensure diverse training corpora. By building controlled, domain-specific datasets, organizations can insulate models from synthetic data risks, anchoring them in human knowledge.

Human-in-the-Loop Oversight: Human validation remains a powerful tool to anchor models to reality. Platforms like Wikipedia, with rigorous editorial standards, provide clean data sources that AI systems can leverage. Hybrid systems, where humans periodically review and validate outputs, prevent drift and maintain alignment with ground truth. This approach is particularly effective in high-stakes domains like healthcare, where factual accuracy is non-negotiable.
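A hybrid review loop can start as a simple routing rule. The sketch below assumes each output carries a model-reported confidence score, which is an assumption about the serving stack rather than something every model provides; the `confidence_floor` and `audit_rate` values are illustrative.

```python
import random
from dataclasses import dataclass


@dataclass
class Output:
    text: str
    confidence: float  # model-reported score in [0, 1]; assumed to be available


def route_for_review(outputs, confidence_floor=0.7, audit_rate=0.05, seed=0):
    """Split outputs into (auto_accepted, needs_human_review).

    Low-confidence outputs always go to a reviewer; a small random audit of
    the rest catches drift that the model is confidently wrong about.
    """
    rng = random.Random(seed)
    accepted, review = [], []
    for out in outputs:
        if out.confidence < confidence_floor or rng.random() < audit_rate:
            review.append(out)
        else:
            accepted.append(out)
    return accepted, review
```

The random audit matters because a collapsing model can be confidently wrong; reviewing only low-confidence outputs would miss exactly that failure.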

These strategies, while resource-intensive, are practical and necessary to combat AI collapse. By combining technological innovation with human oversight, organizations can build resilient AI systems capable of resisting the pull of cognitive black holes.

Research Pathways and Open Problems

Addressing cognitive black holes opens new research frontiers to tackle synthetic data risks. Several pathways show promise for advancing AI resilience:

Entropy-Aware Training: Models could be designed to monitor their own informational entropy, detecting when outputs become overly noisy (entropy drifting too high) or repetitive and self-referential (entropy falling too low). By integrating real-time metrics, such as coherence scores or novelty indices, models can flag degradation and trigger retraining on clean data. This approach represents a proactive defense against feedback contamination, enabling models to self-correct before collapse sets in.
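Informational entropy can be monitored with very little machinery. The sketch below is a deliberately simple proxy, assuming that word-level (unigram) entropy over whitespace-split text can stand in for the richer coherence and novelty metrics mentioned above; the 25% tolerance is an arbitrary, hypothetical setting.

```python
import math
from collections import Counter


def token_entropy(texts):
    """Shannon entropy (bits) of the unigram distribution over a batch of texts."""
    counts = Counter(tok for text in texts for tok in text.lower().split())
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def entropy_alarm(baseline_texts, recent_texts, tolerance=0.25):
    """Flag a batch whose entropy drifts more than `tolerance` (as a fraction)
    from a human-data baseline, in either direction: too low suggests
    repetitive, collapsing output; too high suggests noise."""
    baseline = token_entropy(baseline_texts)
    current = token_entropy(recent_texts)
    drift = abs(current - baseline) / baseline if baseline else 0.0
    return drift > tolerance, baseline, current
```

Unigram entropy grows with batch size, so the baseline and monitored batches should be of comparable length for the comparison to mean anything.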

Multi-Model Checksums: Using ensembles of diverse models to cross-validate outputs can detect early signs of model collapse. If one model begins generating gibberish, others in the ensemble can flag the anomaly, much like error-correcting codes in telecommunications. This leverages diversity to maintain robustness, offering a scalable solution to monitor and mitigate AI collapse.
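A multi-model checksum can be prototyped as a majority-agreement test. The sketch below assumes every ensemble member answers the same prompt and uses word-level Jaccard overlap as a cheap, hypothetical similarity measure; a real system could instead compare embeddings or extracted facts. The model names are placeholders.

```python
from itertools import combinations


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two answers, from 0 (disjoint) to 1 (identical)."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    return len(sa & sb) / len(sa | sb) if sa | sb else 1.0


def flag_outliers(answers: dict[str, str], threshold: float = 0.3) -> list[str]:
    """Return the models whose mean agreement with the rest of the ensemble
    falls below `threshold`: a majority-style check, not a true checksum."""
    scores = {name: [] for name in answers}
    for (m1, a1), (m2, a2) in combinations(answers.items(), 2):
        sim = jaccard(a1, a2)
        scores[m1].append(sim)
        scores[m2].append(sim)
    return sorted(name for name, sims in scores.items()
                  if sims and sum(sims) / len(sims) < threshold)
```

The design relies on the members being diverse: if every model in the ensemble were trained on the same contaminated data, they could drift together and agree with one another while all being wrong.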

Sovereign Data Infrastructure: The global race for clean data pipelines is accelerating. India’s AI-specific data repositories and the EU’s regulatory frameworks position sovereign data as a strategic asset. These pipelines prioritize human-verified, domain-specific data, reducing reliance on unfiltered web scrapes. However, challenges like scalability, cost, and equitable access persist, requiring global coordination to ensure broad adoption.

Open problems remain significant. Developing universal detection for feedback contamination is complex, as synthetic content often mimics human data. Balancing the cost of human-in-the-loop systems with automation is another hurdle, particularly for resource-constrained organizations. Finally, ensuring equitable access to sovereign data pipelines is critical to avoid exacerbating global data disparities. Addressing these challenges will require interdisciplinary collaboration across academia, industry, and policy.

From Collapse to Innovation

Astrophysical black holes, once seen as cosmic voids, are now understood to shape galaxies, regulating star formation and maintaining equilibrium. Similarly, cognitive black holes in AI are not just threats but catalysts for innovation. By confronting model collapse with rigorous science and novel frameworks like reflex loops and sovereign data, we can build AI systems that are resilient and trustworthy. Reflex loops offer a pioneering architecture, enabling models to self-regulate and resist feedback contamination. Sovereign data pipelines, embraced by regions like India, the EU, and the Global South, anchor AI in diverse, human-verified knowledge, fostering global collaboration and challenging data hegemonies.

The stakes are high. As AI integrates into critical systems—healthcare, finance, policy—preventing AI collapse is paramount. Researchers, industry leaders, and policymakers must deploy data filters, reflex loops, and sovereign data at scale to counter synthetic data risks. This is not merely about averting chaos but about forging a future where AI illuminates knowledge. By transforming the threat of cognitive black holes into an opportunity for innovation, we can ensure AI remains a reliable partner, orbiting stability rather than collapsing into noise.

Discover more from Poniak Times
