Agentic Reflex Loops are lightweight AI subsystems that handle routine tasks instantly, reducing LLM load and latency. Inspired by human reflexes, they enable fast, scalable, and cost-efficient AI across edge, healthcare, defense, and automation use cases.

Artificial intelligence (AI) is redefining how businesses operate, powering innovations in customer service, supply chain management, real-time analytics, and beyond. Central to this transformation are AI agents—autonomous systems driven by large language models (LLMs) like GPT-5 or Llama—that excel in complex reasoning and decision-making. However, scaling these systems to handle millions of users or devices in real time remains a significant hurdle. Centralized LLMs face challenges in latency, resource consumption, and system integration, limiting their effectiveness in high-volume, time-sensitive scenarios. Agentic reflex loops, inspired by the efficiency of human reflexes, offer a transformative solution: lightweight subsystems that process routine tasks instantly, allowing LLMs to focus on strategic, high-level decisions.

This article explores the scalability limitations of centralized LLMs, the biological inspiration behind reflex loops, and their technical implementation in modern AI systems. We detail their core components, protocol designs, and robust error-handling mechanisms, supported by industry benchmarks and real-world case studies. From India’s sovereign AI initiatives to edge AI applications in automotive, healthcare, and defense, reflex loops are proving their value. By comparing them to existing frameworks like AutoGPT and CrewAI, and proposing an open Reflex Loop Protocol-1 (RLP-1), this article provides business leaders and technologists with actionable strategies to build scalable, resilient, and cost-effective AI systems that drive measurable impact.

The Scalability Bottlenecks of Centralized LLMs

Centralized LLMs, where a single model processes all inputs, have been the backbone of AI advancements, delivering impressive reasoning capabilities. However, they face critical scalability barriers that hinder their performance in enterprise and edge environments:

Latency Constraints: Advanced LLMs like GPT-4 require 0.5 to 5 seconds to process a single query, making them unsuitable for real-time applications such as autonomous vehicles, live customer support, or high-frequency trading systems. This delay disrupts user experience and operational efficiency.

Memory Inefficiency: Large context windows, while powerful, often retrieve irrelevant data, leading to inconsistent outputs when processing massive datasets. This inefficiency becomes a bottleneck in data-intensive industries like logistics or finance.

Integration Challenges: Connecting LLMs to diverse systems—such as databases, APIs, or IoT devices—creates compatibility issues, slowing down workflows and increasing development costs.

Systemic Vulnerabilities: Centralized architectures rely on a single point of coordination, making them prone to system-wide failures if the core model crashes, a significant risk in mission-critical applications.

Data Privacy Risks: Transmitting sensitive data to a central model increases the potential for breaches, a critical concern in regulated sectors like healthcare, finance, and defense.

Resource Intensity: The high computational power and bandwidth required for LLMs make them costly to deploy on edge devices, limiting their use in resource-constrained environments like IoT or mobile applications.

These limitations highlight the need for a decentralized approach. Agentic reflex loops address these challenges by offloading routine tasks to lightweight subsystems, reducing dependency on LLMs while maintaining their oversight for complex decision-making. This hybrid model enhances scalability, reduces operational costs, and ensures reliability across diverse industries.

Learning from Nature: The Power of Human Reflex Arcs

Nature provides a compelling model for scalable AI through human reflex arcs. When you accidentally touch a hot surface, your hand withdraws instantly without conscious thought, thanks to a streamlined neural pathway:

Receptor: Detects the stimulus, such as heat on the skin.

Sensory Neuron: Transmits the signal to the spinal cord.

Interneuron: Processes the signal locally within the spinal cord.

Motor Neuron: Activates a muscle response.

Effector: Executes the action, such as pulling the hand away.

Reflexes, whether somatic (e.g., the knee-jerk response) or autonomic (e.g., pupil constriction in response to light), operate in milliseconds, using minimal neural resources for maximum efficiency. In robotics, artificial reflex arcs enable rapid responses, such as adjusting a robotic arm’s grip strength based on real-time sensor feedback.

Agentic reflex loops emulate this biological efficiency, processing predictable tasks locally to minimize latency and resource demands. By reserving LLMs for strategic reasoning, they create AI systems that are as fast and reliable as nature’s survival mechanisms, making them ideal for real-time, high-volume applications.

What Are Agentic Reflex Loops?

Agentic AI refers to systems that autonomously set goals, make decisions, and execute actions with minimal human intervention. Within these systems, reflex loops are specialized subsystems designed for immediate, condition-based responses, similar to simple reflex agents in AI theory. For example, a chatbot might instantly reply “Hello!” to a user’s greeting without engaging its LLM core.

Reflex loops come in two primary forms:

Simple Reflex: Rule-based responses triggered by current inputs, such as answering FAQs or triggering alerts based on predefined conditions.

Model-Based Reflex: Context-aware responses that incorporate internal states, like user history or sensor data, for adaptive outputs.

By combining these fast-acting reflex layers with slower, deliberative LLM processes, hybrid AI architectures achieve a balance of speed and intelligence. This approach is particularly valuable in edge computing, where low latency is critical, such as in IoT devices or autonomous systems.
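To make the distinction between the two forms concrete, here is a minimal Python sketch. The names `simple_reflex`, `ModelBasedReflex`, and the `GREETINGS` set are illustrative assumptions, not part of any published reflex-loop API; the greeting example simply mirrors the chatbot case described above.

```python
from typing import Dict, Optional

# Hypothetical rule set for illustration only.
GREETINGS = {"hi", "hello", "hey"}


def simple_reflex(message: str) -> Optional[str]:
    """Simple reflex: the reply depends only on the current input."""
    normalized = message.strip().lower().rstrip("!.")
    if normalized in GREETINGS:
        return "Hello!"
    return None  # no rule matched; the caller should escalate to the LLM


class ModelBasedReflex:
    """Model-based reflex: keeps internal state (greetings seen per user)."""

    def __init__(self) -> None:
        self.greeting_counts: Dict[str, int] = {}

    def respond(self, user_id: str, message: str) -> Optional[str]:
        if simple_reflex(message) is None:
            return None  # not a greeting; escalate
        seen = self.greeting_counts.get(user_id, 0)
        self.greeting_counts[user_id] = seen + 1
        # The same input yields a different reply depending on stored context.
        return "Hello!" if seen == 0 else "Welcome back!"
```

Returning `None` is one simple way to signal "the reflex cannot handle this", leaving the decision to call the LLM with the surrounding agent.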

How Agentic Reflex Loops Operate

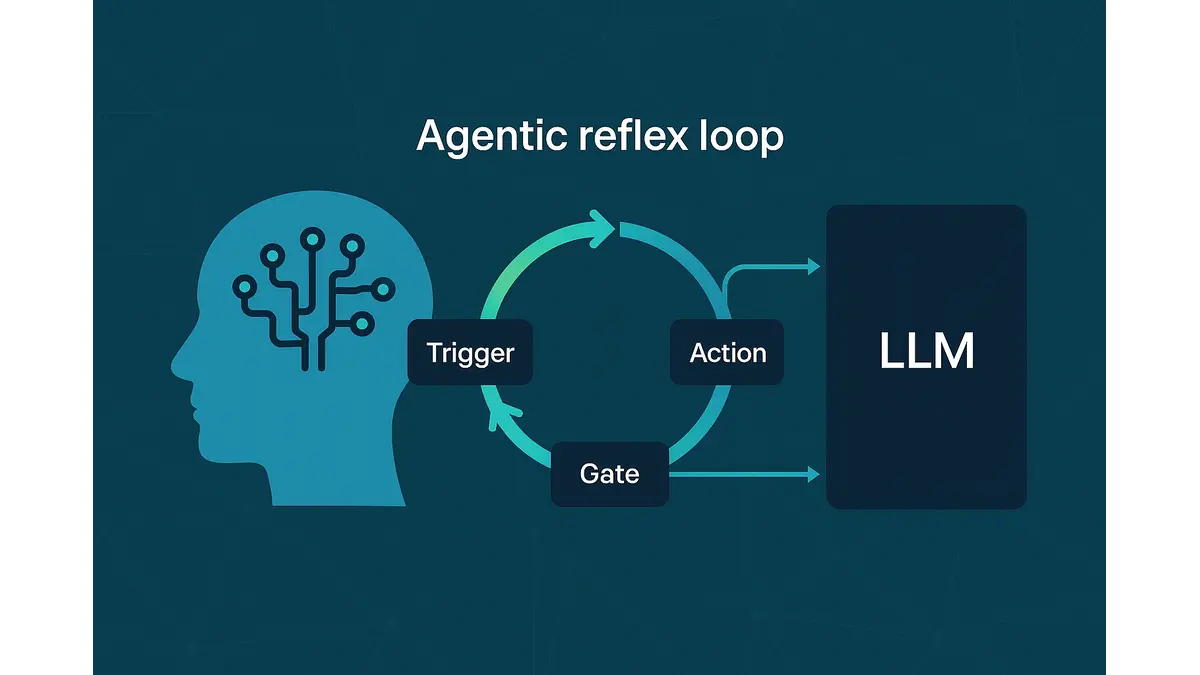

An AI agent with a reflex subsystem follows a clear, efficient workflow to ensure rapid and reliable performance:

Input Detection: The agent captures data from sensors (e.g., temperature readings from IoT devices) or APIs (e.g., user queries in a customer service platform). Inputs are routed to the reflex subsystem first.

Trigger Activation: The trigger identifies relevant stimuli based on predefined conditions, such as “temperature exceeds 80°C” or “user input contains ‘support.’”

Gate Evaluation: The gate applies rules (e.g., “if greeting detected, respond ‘Hello!’”) or lightweight machine learning models (e.g., classifying inputs as urgent) to determine if the reflex can handle the task.

Memory Check: The memory buffer provides context, such as past user interactions or sensor readings, enabling model-based reflexes to deliver tailored responses.

Action Execution: If approved by the gate, the reflex subsystem generates an immediate output, such as sending an alert or replying to a user. Complex inputs are escalated to the LLM core.

Feedback Loop: In learning-based setups, a reward estimator evaluates outcomes (e.g., user satisfaction or energy savings) and updates rules or models to improve future responses.

Escalation Handling: For complex queries, the LLM processes the input using its reasoning capabilities, potentially refining the reflex subsystem’s rules for future efficiency.

For example, in a smart warehouse, a reflex loop adjusts conveyor belt speeds based on sensor data, while unusual patterns—such as a sudden spike in demand—trigger LLM analysis. This ensures fast, reliable operations with minimal resource use.
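The workflow above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the class `TemperatureReflex`, the 15°C spike limit, and the `default_escalation` placeholder are hypothetical, and the feedback step is left out for brevity. Only the 80°C trigger condition comes from the text.

```python
from collections import deque
from typing import Callable, Deque


def default_escalation(reading: float) -> str:
    # Placeholder for a call to the LLM core (hypothetical).
    return f"escalated:{reading}"


class TemperatureReflex:
    def __init__(
        self,
        threshold_c: float = 80.0,  # trigger condition from the text
        spike_c: float = 15.0,      # assumed limit for an "unusual" jump
        escalate: Callable[[float], str] = default_escalation,
    ) -> None:
        self.threshold_c = threshold_c
        self.spike_c = spike_c
        self.escalate = escalate
        self.memory: Deque[float] = deque(maxlen=5)  # short memory buffer

    def handle(self, reading: float) -> str:
        # Memory check: recall the previous reading, then store this one.
        previous = self.memory[-1] if self.memory else reading
        self.memory.append(reading)
        # Trigger: readings at or below the threshold are not relevant stimuli.
        if reading <= self.threshold_c:
            return "no_action"
        # Gate: a sudden jump is treated as too unusual for the reflex.
        if reading - previous > self.spike_c:
            return self.escalate(reading)
        # Action: routine over-temperature, handled locally.
        return "alert_sent"
```

Feeding the readings 70.0, 82.0, and 120.0 in sequence produces no action, a local alert, and an escalation respectively, since only the last jump exceeds the assumed spike limit.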

Core Components of Reflex Loops

Reflex loops are built on four interconnected components that work together to deliver speed and adaptability:

Trigger: Detects and filters inputs, focusing on relevant stimuli like motion in a security system or a user command like “book a flight.”

Gate: Applies condition-action rules or lightweight models to decide whether to act or escalate, ensuring efficient task allocation.

Memory: Stores historical data, such as past sensor readings or customer preferences, enabling context-aware reflexes for personalized responses.

Reward Estimator: In learning-based loops, evaluates outcomes using reinforcement signals (e.g., energy saved by a smart thermostat) to optimize future actions.

These components form a cohesive unit, enabling rapid responses while supporting adaptability. In a logistics system, for instance, the trigger identifies a delivery request, the gate checks inventory rules, the memory recalls past orders, and the reward estimator optimizes routing paths—all in milliseconds.
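Of the four components, the reward estimator is the least self-explanatory, so a small sketch may help. The version below assumes a very simple design, a running average of observed rewards per action, which the article does not prescribe; the class and the action names `eco` and `boost` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable


class RewardEstimator:
    """Tracks the average reward observed for each reflex action."""

    def __init__(self) -> None:
        self.totals: Dict[str, float] = defaultdict(float)
        self.counts: Dict[str, int] = defaultdict(int)

    def update(self, action: str, reward: float) -> None:
        # Record one outcome, e.g. energy saved after a thermostat action.
        self.totals[action] += reward
        self.counts[action] += 1

    def estimate(self, action: str) -> float:
        n = self.counts.get(action, 0)
        return self.totals[action] / n if n else 0.0

    def best(self, actions: Iterable[str]) -> str:
        # The gate can consult this to prefer the historically best action.
        return max(actions, key=self.estimate)
```

A production system would likely need exploration and decay of old observations; this sketch only shows where reinforcement signals enter the loop.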

Designing Robust Protocols for Reflex Loops

Reliable reflex loops depend on well-defined protocols tailored to specific business needs:

Rules-Based Protocols: Use explicit if-then logic (e.g., “if stock falls below 10 units, reorder”) for predictable, transparent tasks like regulatory compliance or financial audits. These are ideal for industries requiring auditability.

Learning-Based Protocols: Leverage machine learning to adapt to data patterns, making them suitable for dynamic tasks like demand forecasting or fraud detection. However, they require significant data and computational resources.

Hybrid Protocols: Combine the reliability of rules-based systems with the adaptability of learning-based systems, such as fixed safety checks paired with adaptive traffic predictions in logistics.

Each protocol type addresses specific use cases. Rules-based protocols excel in regulated environments, learning-based protocols thrive in dynamic settings, and hybrid protocols offer a balanced approach for complex workflows.
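As a rough illustration of how a hybrid protocol might layer the other two, consider the reorder rule quoted above. In this sketch the fixed rule always takes precedence, and a stand-in "learned" forecast (just a mean of recent sales, used here as an assumption in place of a real model) can trigger an earlier reorder. The function names and the three-day lead time are hypothetical.

```python
from statistics import mean
from typing import Sequence

REORDER_POINT = 10  # from the rule "if stock falls below 10 units, reorder"


def rules_based(stock: int) -> str:
    """Explicit if-then logic: transparent and easy to audit."""
    return "reorder" if stock < REORDER_POINT else "hold"


def learned_forecast(recent_daily_sales: Sequence[float]) -> float:
    """Stand-in for a learned model: mean of recent daily sales."""
    return mean(recent_daily_sales) if recent_daily_sales else 0.0


def hybrid(stock: int, recent_daily_sales: Sequence[float],
           lead_time_days: int = 3) -> str:
    # The fixed rule is checked first and cannot be overridden.
    if rules_based(stock) == "reorder":
        return "reorder"
    # Adaptive part: reorder early if forecast demand would breach the rule.
    expected_demand = learned_forecast(recent_daily_sales) * lead_time_days
    return "reorder" if stock - expected_demand < REORDER_POINT else "hold"
```

With 25 units in stock, daily sales of about 2 units leave the decision at `hold`, while daily sales of about 6 units tip it to `reorder` before the fixed threshold is reached.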

Ensuring Reliability with Robust Error Handling

Reliability is critical for reflex loops, and robust error-handling mechanisms ensure consistent performance:

Retries: Reflex loops reattempt failed actions, such as rechecking sensor data for accuracy, to avoid disruptions.

Validation: Schema checks verify input integrity, such as ensuring JSON formats in API calls are correct, preventing processing errors.

Escalation: Unresolvable issues are escalated to the LLM core, with detailed logs capturing context for efficient debugging.

Feedback: Callbacks update rules or models based on errors, enabling continuous improvement of the reflex subsystem.

For example, a customer service chatbot retries parsing a malformed user input, validates its structure, escalates to the LLM if necessary, and logs issues to refine its reflex rules. This layered approach ensures resilience and adaptability in real-world deployments.
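The layered approach can be sketched as a single wrapper function. The field names in `REQUIRED_FIELDS`, the function `handle_with_fallback`, and the retry count are assumptions made for illustration. Retries are applied to the reflex action rather than to parsing, since re-parsing the same malformed input would fail the same way.

```python
import json
import logging
from typing import Any, Callable, Dict

logger = logging.getLogger("reflex")

# Hypothetical schema: field name -> required type.
REQUIRED_FIELDS = {"user_id": str, "text": str}


def validate(payload: Any) -> bool:
    """Schema check: payload must be an object with correctly typed fields."""
    return isinstance(payload, dict) and all(
        isinstance(payload.get(name), kind)
        for name, kind in REQUIRED_FIELDS.items()
    )


def handle_with_fallback(
    raw: str,
    reflex: Callable[[Dict[str, Any]], str],
    escalate: Callable[[str], str],
    max_attempts: int = 2,
) -> str:
    # Validation: malformed or incomplete input goes straight to the LLM.
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        logger.warning("invalid JSON, escalating: %s", exc)
        return escalate(raw)
    if not validate(payload):
        logger.warning("schema check failed, escalating")
        return escalate(raw)
    # Retries: reattempt the reflex action a bounded number of times.
    for attempt in range(1, max_attempts + 1):
        try:
            return reflex(payload)
        except Exception as exc:  # broad catch is deliberate in this sketch
            logger.warning("reflex attempt %d failed: %s", attempt, exc)
    # Escalation: the reflex could not resolve the request.
    return escalate(raw)
```

The log records are what a feedback step would later consume to refine rules; that step is outside the scope of this sketch.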

Reflex Loops vs. Existing AI Frameworks

Compared to current agentic frameworks, reflex loops offer distinct advantages in scalability and efficiency:

AutoGPT: Generates autonomous plans using GPT-4 but struggles with error-prone self-feedback loops, particularly in complex, multi-step workflows. Reflex loops could handle routine tasks like data validation, reducing errors and LLM dependency.

CrewAI: Orchestrates multi-agent collaboration but relies on LLMs for all decisions, leading to latency and integration bottlenecks. Reflex layers would enable faster coordination for routine agent interactions.

By integrating reflex loops, these frameworks could achieve lower latency and higher reliability, processing routine tasks locally while reserving LLMs for strategic decisions. For instance, AutoGPT could use reflexes to validate inputs before planning, while CrewAI could reflexively assign tasks among agents, streamlining workflows.

Performance Benchmarks: The Reflex Advantage

Industry benchmarks underscore the performance benefits of reflex loops:

Pure LLMs: Average 0.5–5 seconds per query, inadequate for real-time applications like autonomous driving or live chatbots.

Reflex Subsystems: Achieve sub-0.5-second responses, with edge AI systems reaching 100ms for sensor-based triggers.

Hybrid Systems: Reduce overall latency by 50–70%, as demonstrated in automotive and IoT deployments.

For example, a reflex-equipped chatbot responds to greetings in 200ms, compared to 2 seconds for LLM-only systems. In edge AI, reflex loops enable sub-100ms obstacle detection in autonomous vehicles, critical for safety. These metrics, drawn from industry studies, confirm reflex loops’ superiority in real-time scenarios.
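A back-of-the-envelope calculation shows how these numbers relate. The sketch below assumes a simple blended model in which a fraction of requests is answered by the reflex layer and the rest by the LLM, with no extra overhead on escalated requests; that model is an assumption of this example, not a result from the cited benchmarks. The 200ms and 2-second defaults are the chatbot figures quoted above.

```python
def blended_latency_ms(reflex_share: float,
                       reflex_ms: float = 200.0,
                       llm_ms: float = 2000.0) -> float:
    """Average latency when `reflex_share` of requests never reach the LLM."""
    return reflex_share * reflex_ms + (1.0 - reflex_share) * llm_ms


def latency_reduction(reflex_share: float,
                      reflex_ms: float = 200.0,
                      llm_ms: float = 2000.0) -> float:
    """Fractional reduction relative to an LLM-only system."""
    return 1.0 - blended_latency_ms(reflex_share, reflex_ms, llm_ms) / llm_ms
```

Under this model, routing 60% of requests to the reflex layer gives an average of about 920ms, a reduction of roughly 54%. Reaching the upper end of the 50–70% range would require the reflex layer to absorb closer to three quarters of the traffic.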

Real-World Applications Transforming Industries

Reflex loops are delivering tangible value across diverse sectors, showcasing their versatility and impact:

Automotive Edge AI: Reflex subsystems enable instant obstacle detection and braking in autonomous vehicles, achieving sub-100ms responses critical for passenger safety. For instance, a reflex loop processes lidar data to trigger emergency stops, enhancing reliability.

Healthcare: Wearable devices use reflexes for real-time diagnostics, such as detecting irregular heartbeats from ECG data and alerting users instantly. A smartwatch, for example, flags anomalies for medical review, improving patient outcomes.

Defense: Secure reflex loops power autonomous drones, processing sensor data locally for low-latency navigation and threat detection. A drone might reflexively adjust its flight path to avoid obstacles, ensuring mission success.

Industrial & Logistics Automation: In warehouses or factory lines, reflex loops dynamically adjust operations (e.g., conveyor belt speeds) in response to sensor data, reducing downtime.

These applications demonstrate reflex loops’ ability to deliver fast, reliable, and cost-effective performance in high-stakes environments, making them a strategic asset for businesses.

The Future of Reflex Loops: Innovation on the Horizon

Ongoing research points to exciting advancements in reflex loop technology, grounded in current studies:

Reflex Evolution with Genetic Algorithms: Simulated evolution optimizes reflex behaviors, improving efficiency in tasks like resource allocation or scheduling. Genetic algorithms iteratively refine rule sets or machine learning models, enhancing performance without human intervention.

Agent-to-Agent Reflex Synchronization (A2A Reflex Mesh): Centralized or distributed protocols enable coordinated reflexes across multi-agent systems, such as swarm robotics or collaborative AI in smart grids. Synchronization ensures seamless interaction, like agents sharing real-time data for collective decision-making.

These advancements promise to enhance the scalability and interoperability of reflex loops, paving the way for more complex, collaborative AI systems that drive business innovation.
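To give a feel for the genetic-algorithm idea, here is a toy sketch that evolves a single reflex threshold against labelled sensor readings. Everything in it is an assumption for illustration: the fitness function, the population size, the mutation scale, and the 0 to 150 search range. Real rule sets would have many more parameters.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[float, bool]  # (sensor reading, should the reflex alert?)


def fitness(threshold: float, samples: Sequence[Sample]) -> float:
    """Share of samples that the rule `reading > threshold` gets right."""
    correct = sum((reading > threshold) == is_alert
                  for reading, is_alert in samples)
    return correct / len(samples)


def evolve_threshold(samples: Sequence[Sample], generations: int = 30,
                     population_size: int = 20, seed: int = 0) -> float:
    rng = random.Random(seed)  # seeded for reproducible runs
    population: List[float] = [rng.uniform(0.0, 150.0)
                               for _ in range(population_size)]
    for _ in range(generations):
        ranked = sorted(population, key=lambda t: fitness(t, samples),
                        reverse=True)
        parents = ranked[: population_size // 2]  # selection, keeps the best
        children = [
            # Crossover (average of two parents) plus Gaussian mutation.
            (rng.choice(parents) + rng.choice(parents)) / 2
            + rng.gauss(0.0, 2.0)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children
    return max(population, key=lambda t: fitness(t, samples))
```

Because the best candidates survive each generation unchanged, the best fitness can never get worse over time, which is a convenient property for a reflex that must stay safe while it adapts.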

A Strategic Imperative for Scalable AI

Agentic reflex loops bridge biological efficiency and technological precision, addressing the scalability limitations of centralized LLMs. By delegating routine tasks to lightweight, decentralized subsystems, they deliver faster, more reliable, and cost-effective AI solutions. From India’s sovereign AI initiatives to edge applications in automotive, healthcare, and defense, reflex loops are proving their value as a cornerstone of resilient, real-time AI architectures.

For business leaders and technologists, adopting reflex loops offers a strategic advantage in a competitive landscape. They enable organizations to optimize operations, reduce costs, and deliver seamless user experiences in high-volume, time-sensitive environments. As AI adoption accelerates, reflex loops will play a pivotal role in shaping the future of intelligent systems, empowering businesses to achieve excellence through scalable, innovative AI solutions.

Author’s Note:

This framework is a new perspective developed through our ongoing work at Poniak Labs. While it draws inspiration from biology and existing AI systems, the idea of Agentic Reflex Loops as described here is an original synthesis intended to spark discussion and innovation in scalable AI design.
