Entropy-aware training leverages information theory to regulate uncertainty in AI. By monitoring and minimizing entropy, models become more stable, generalizable, and trustworthy. Verified use cases in GCNs, RL, and UAVs highlight its value, while future applications in LLMs and AGI show its transformative potential.

Artificial Intelligence (AI) systems have reshaped industries, from healthcare to finance, by enabling advanced data processing and decision-making. Yet, as AI models grow in complexity, ensuring their reliability, efficiency, and ethical alignment remains a challenge. A key issue is managing uncertainty in AI training and inference processes. Entropy-aware training offers a promising solution, leveraging information theory to create self-regulating AI systems that are robust, efficient, and trustworthy. By incorporating entropy—a measure of uncertainty or randomness—into training, AI systems can achieve enhanced stability, coherence, and adaptability, addressing critical challenges in modern AI deployment.

This article explores entropy-aware training, its theoretical foundations, verified applications, and emerging possibilities. We emphasize factual implementations while clearly labeling visionary ideas, providing a clear, engaging, and authoritative resource for business leaders, technologists, and policymakers. Our goal is to deliver high-value content optimized for clarity and credibility.

Understanding Entropy in AI

What is Entropy?

Entropy, originating in thermodynamics and information theory, quantifies disorder or uncertainty in a system. In AI, entropy measures unpredictability in datasets, model outputs, or decision-making. For example, a dataset with evenly distributed class labels (e.g., equal positive and negative outcomes) has high entropy, indicating uncertainty. A dataset dominated by one class has low entropy, reflecting predictability.
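To make the balanced-versus-skewed comparison concrete, here is a minimal sketch that computes the entropy of a label set in bits. The function name `label_entropy` and the two toy datasets are illustrative assumptions, not part of any cited work.

```python
import math
from collections import Counter

def label_entropy(labels):
    """Shannon entropy (in bits) of the empirical class distribution."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

# Hypothetical toy datasets
balanced = ["pos"] * 50 + ["neg"] * 50   # evenly split labels
skewed = ["pos"] * 95 + ["neg"] * 5      # dominated by one class

print(label_entropy(balanced))  # 1.0 bit, the maximum for two classes
print(label_entropy(skewed))    # about 0.29 bits, far more predictable
```

The evenly split dataset reaches the two-class maximum of one bit, while the skewed dataset falls well below it.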

In machine learning, entropy underpins algorithms like decision trees, where it guides feature selection to reduce uncertainty. Information Gain, derived from entropy, helps decision trees identify optimal data splits for improved accuracy. Entropy provides a framework for evaluating and refining AI models by measuring their order versus disorder.
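Information Gain can be sketched as the parent node's entropy minus the size-weighted entropy of its children. The helper names and the two candidate splits below are hypothetical; production decision-tree libraries implement the same idea with many refinements.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(labels).values())

def information_gain(parent, left, right):
    """Parent entropy minus the size-weighted entropy of the two children."""
    n = len(parent)
    weighted = (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)
    return entropy(parent) - weighted

parent = ["yes"] * 5 + ["no"] * 5

# Hypothetical split A separates the classes perfectly.
gain_a = information_gain(parent, ["yes"] * 5, ["no"] * 5)

# Hypothetical split B leaves both children as mixed as the parent.
gain_b = information_gain(parent,
                          ["yes", "yes", "no", "no"],
                          ["yes"] * 3 + ["no"] * 3)

print(gain_a, gain_b)
```

A tree builder would prefer split A, because it removes all of the uncertainty in the parent node, whereas split B removes none.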

The Role of Entropy in AI Training

Complex AI systems, such as deep learning models, often function as “black boxes,” producing outputs that are hard to interpret or predict consistently. Poorly controlled entropy can lead to unreliable predictions, poor generalization, and diminished trust. For instance, when large language models (LLMs) are trained on self-generated data, they risk model collapse, where the output distribution drifts away from the original data distribution and rare patterns are progressively lost, degrading performance.

Entropy-aware training mitigates these issues by treating entropy as a core metric during training. By promoting low-entropy states, in which models focus on meaningful patterns rather than noise, it aims to improve coherence, stability, and efficiency. This reflects the view that learning creates order from disorder.

The Principles of Entropy-Aware Training

Core Concepts

Entropy-aware training designs AI systems to monitor and regulate entropy levels during training and inference. Key strategies include:

Entropy Minimization: Optimizing models to reduce uncertainty in predictions, focusing on relevant patterns. Techniques like regularization (e.g., L1/L2) and data preprocessing eliminate noise and redundancy.

Dynamic Entropy Regulation: Adjusting model behavior in real-time based on entropy measurements. This prevents issues like attention collapse (overly focused attention) or diffusion (overly spread attention) in transformer-based models.

Self-Regulation Through Feedback Loops: Incorporating feedback mechanisms for models to self-correct based on entropy levels, particularly in semi-supervised or reinforcement learning settings with evolving data.

Balancing Exploration and Exploitation: In reinforcement learning (RL), maintaining optimal initial entropy ensures effective exploration. Low entropy can cause learning failures, while high entropy may lead to inefficiency. Entropy thresholds balance these dynamics.
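Several of the strategies above reduce to adding an entropy term to the training objective. The sketch below is one simple way to do that under stated assumptions: the function names, the `weight` parameter, and the toy logits are all illustrative. A positive weight penalizes uncertain predictions (entropy minimization), while a negative weight rewards them, as an exploration bonus in RL would.

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)  # stabilize the exponentials
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def mean_entropy(probs, eps=1e-12):
    """Average Shannon entropy (nats) over the rows of a probability matrix."""
    return float(-(probs * np.log(probs + eps)).sum(axis=-1).mean())

def entropy_regularized_loss(logits, targets, weight):
    """Cross-entropy plus weight * mean prediction entropy.

    weight > 0 discourages uncertain outputs; weight < 0 encourages them.
    """
    probs = softmax(logits)
    rows = np.arange(len(targets))
    cross_entropy = float(-np.log(probs[rows, targets] + 1e-12).mean())
    return cross_entropy + weight * mean_entropy(probs)

# Hypothetical batch: two examples, three classes
logits = np.array([[2.0, 0.5, 0.1],
                   [0.2, 0.1, 0.3]])
targets = np.array([0, 2])

print(entropy_regularized_loss(logits, targets, weight=0.1))   # entropy penalized
print(entropy_regularized_loss(logits, targets, weight=-0.1))  # entropy rewarded
```

The size and sign of the weight are tuning decisions, and a dynamic scheme would adjust the weight during training rather than fixing it in advance.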

Theoretical Foundations

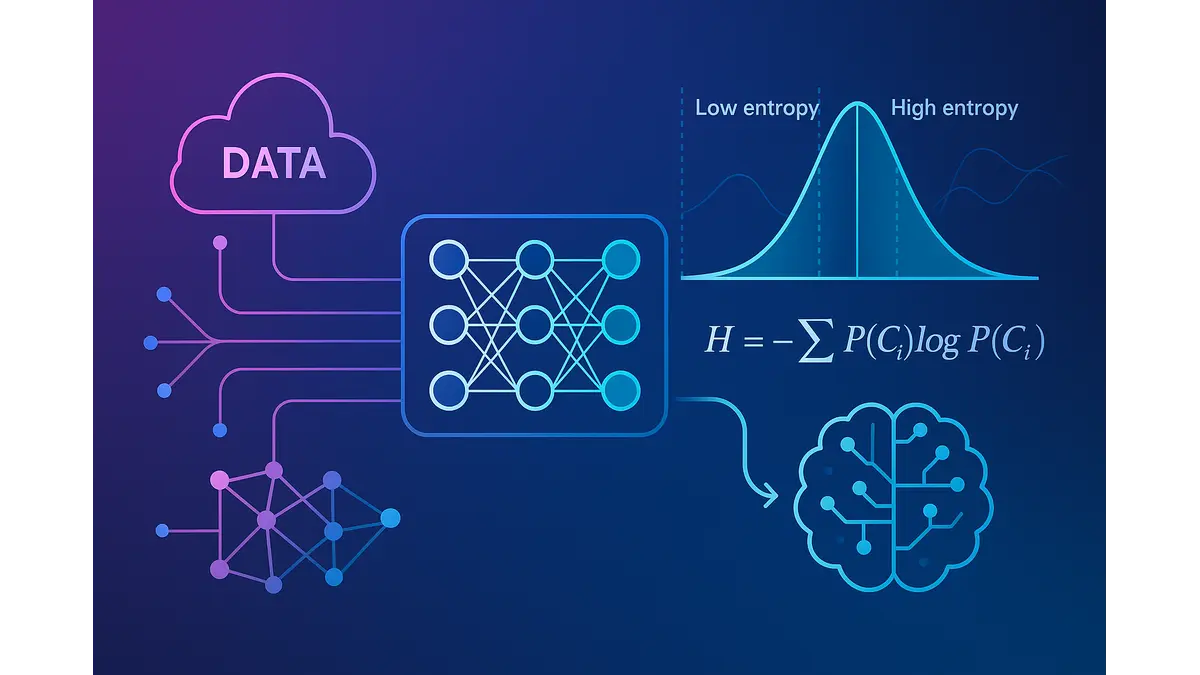

Entropy-aware training is grounded in information theory, particularly Shannon’s entropy, which quantifies uncertainty in a probability distribution. For a dataset with classes C1, C2, …, Cn, entropy H is defined as:

$$H = -\sum_{i=1}^{n} P(C_i) \log_2 P(C_i)$$

where P(Ci) is the probability of class Ci.

High entropy → high uncertainty (model unsure, outputs spread evenly).

Low entropy → predictability (model confident, outputs concentrated).

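Minimizing entropy during training encourages models to commit to the patterns they have learned, producing confident predictions. Confidence is not the same as accuracy, so entropy is minimized alongside a task loss rather than on its own.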

Alongside entropy, Kullback-Leibler (KL) divergence measures how one probability distribution differs from another:

$$D_{KL}(P \parallel Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}$$

where P is the true data distribution and Q is the model’s predicted distribution. Minimizing KL divergence pulls model predictions toward the data distribution, which supports generalization and trustworthiness.
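As a small illustration, the following sketch computes the KL divergence between two discrete distributions over the same outcomes. The function name and the example distributions `a` and `b` are assumptions made for demonstration. The code assumes the second argument is nonzero wherever the first is.

```python
import numpy as np

def kl_divergence(p, q):
    """D_KL(p || q) in nats for two discrete distributions on the same support.

    Assumes q(x) > 0 wherever p(x) > 0.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mask = p > 0  # terms with p(x) = 0 contribute nothing
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

# Hypothetical distributions over three outcomes
a = [0.7, 0.2, 0.1]
b = [0.5, 0.3, 0.2]

print(kl_divergence(a, a))  # 0.0: identical distributions
print(kl_divergence(a, b))  # positive
print(kl_divergence(b, a))  # positive, but a different value
```

Because the measure is asymmetric, swapping the two arguments changes the result, so which distribution plays which role matters when it is used as a training objective.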

Practical Implementations of Entropy-Aware Training

Graph Convolutional Networks (GCNs)

Graph convolutional networks, used in node classification and recommendation systems, benefit from entropy-aware training. The Entropy-Aware Self-Training Graph Convolutional Network (ES-GCN) framework, proposed by Nazari et al. (arXiv:2307.16182), integrates an entropy-aggregation layer to enhance reasoning. This layer prioritizes nodes with high prediction certainty using entropy-based random walk theory, improving accuracy in semi-supervised settings. Experiments on datasets like Cora and CiteSeer confirm ES-GCN’s superior performance over traditional GCNs by reducing entropy and enhancing reliability.
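The idea of prioritizing high-certainty nodes can be illustrated with a deliberately simplified stand-in: keep only the unlabeled nodes whose predicted class distribution has low entropy and use their top class as a pseudo-label. This is not the ES-GCN entropy-aggregation layer. The function name, threshold, and probabilities are hypothetical.

```python
import numpy as np

def select_confident_nodes(probs, unlabeled_idx, max_entropy):
    """Return (node ids, pseudo-labels) for unlabeled nodes with low prediction entropy."""
    p = probs[unlabeled_idx]
    ent = -(p * np.log(p + 1e-12)).sum(axis=1)  # per-node entropy in nats
    keep = ent < max_entropy
    return unlabeled_idx[keep], p[keep].argmax(axis=1)

# Hypothetical class probabilities for four unlabeled nodes, three classes
probs = np.array([
    [0.96, 0.02, 0.02],   # confident
    [0.40, 0.35, 0.25],   # uncertain
    [0.05, 0.90, 0.05],   # confident
    [0.34, 0.33, 0.33],   # nearly uniform
])
unlabeled_idx = np.array([0, 1, 2, 3])

nodes, pseudo_labels = select_confident_nodes(probs, unlabeled_idx, max_entropy=0.5)
print(nodes, pseudo_labels)
```

In a self-training loop, the selected nodes would be added to the labeled set before the next training round, while the uncertain nodes stay unlabeled.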

Reinforcement Learning (RL)

In deep reinforcement learning, entropy-aware training improves exploration efficiency. A 2022 study by Jang et al. (PubMed ID: 35797129) introduced entropy-aware model initialization, where models are initialized to exceed a predefined entropy threshold. This mitigates learning failures in tasks with discrete action spaces, such as drone control or medical imaging, by ensuring effective exploration before policy convergence.
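A rough sketch of the initialization idea is shown below: redraw random initial weights until the policy's mean entropy over a batch of states exceeds a threshold. The linear-softmax policy, the function names, and all parameter values are simplifying assumptions for illustration and do not reproduce the cited study's implementation.

```python
import numpy as np

def mean_policy_entropy(weights, states):
    """Mean entropy (nats) of a linear-softmax policy over a batch of states."""
    logits = states @ weights
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    return float(-(probs * np.log(probs + 1e-12)).sum(axis=1).mean())

def init_above_threshold(states, n_actions, threshold,
                         scale=0.5, max_tries=100, seed=0):
    """Redraw random initial weights until mean policy entropy exceeds threshold."""
    rng = np.random.default_rng(seed)
    for attempt in range(1, max_tries + 1):
        weights = rng.normal(0.0, scale, size=(states.shape[1], n_actions))
        entropy = mean_policy_entropy(weights, states)
        if entropy > threshold:
            return weights, entropy, attempt
    raise RuntimeError("no initialization met the entropy threshold")

# Hypothetical setup: 64 sampled states with 4 features, 4 discrete actions
states = np.random.default_rng(1).normal(size=(64, 4))
threshold = 0.5 * np.log(4)  # half of the maximum entropy for 4 actions

weights, entropy, attempt = init_above_threshold(states, n_actions=4,
                                                 threshold=threshold)
print(f"accepted on attempt {attempt} with mean entropy {entropy:.3f}")
```

The threshold is expressed as a fraction of the maximum possible entropy, log(number of actions), so the same setting can be reused across action spaces of different sizes.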

Unmanned Aerial Vehicles (UAVs)

Entropy-aware training enhances UAV performance in dynamic environments. A 2025 study (MDPI, DOI: 10.3390/drones9010027) demonstrated that adaptive entropy control during training improves UAV navigation and decision-making. By maintaining optimal entropy levels, UAVs balance exploration and exploitation, ensuring robust performance in tasks like path planning.
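One generic way to keep policy entropy near a chosen level is to adapt the weight of the entropy bonus during training. The controller below is a hypothetical sketch of that idea, not the method used in the cited study. The function name, step size, and bounds are assumptions.

```python
import math

def update_entropy_coef(coef, measured_entropy, target_entropy,
                        step_size=0.1, min_coef=1e-4, max_coef=1.0):
    """Raise the entropy-bonus weight when entropy is below target, lower it when above."""
    coef *= math.exp(step_size * (target_entropy - measured_entropy))
    return min(max(coef, min_coef), max_coef)  # keep the weight within bounds

coef = 0.01

# Policy is too deterministic: the weight grows to encourage exploration.
raised = update_entropy_coef(coef, measured_entropy=0.2, target_entropy=0.7)

# Policy is too random: the weight shrinks to favor exploitation.
lowered = update_entropy_coef(coef, measured_entropy=1.2, target_entropy=0.7)

print(raised, lowered)
```

Calling this update once per training iteration nudges the agent back toward the target entropy instead of relying on a fixed exploration schedule.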

Proposed Applications in Large Language Models (LLMs)

Entropy-guided attention mechanisms are an emerging research direction for LLMs, particularly for improving efficiency and privacy. While no peer-reviewed implementations from institutions like NYU have been widely documented, researchers hypothesize that regulating attention entropy could prevent degenerate patterns, such as near one-hot attention (low entropy) or uniformly diffuse attention (high entropy). This approach could simplify models and enhance privacy by reducing reliance on complex nonlinear operations, though it remains a proposed concept requiring further validation.
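To show what monitoring attention entropy might look like, the sketch below computes the entropy of each attention row and flags rows that are close to one-hot or close to uniform. The function names, the cutoff fractions, and the example weights are hypothetical and are not taken from any published implementation.

```python
import numpy as np

def attention_entropy(weights, eps=1e-12):
    """Entropy (nats) of each attention row; each row is assumed to sum to 1."""
    return -(weights * np.log(weights + eps)).sum(axis=-1)

def classify_rows(weights, low_frac=0.1, high_frac=0.95):
    """Label each row relative to the maximum entropy log(n) for n keys."""
    max_entropy = np.log(weights.shape[-1])
    labels = []
    for ratio in attention_entropy(weights) / max_entropy:
        if ratio < low_frac:
            labels.append("collapsed")   # nearly one-hot attention
        elif ratio > high_frac:
            labels.append("diffuse")     # nearly uniform attention
        else:
            labels.append("ok")
    return labels

# Hypothetical attention weights: three queries attending over four keys
attn = np.array([
    [0.997, 0.001, 0.001, 0.001],
    [0.25, 0.25, 0.25, 0.25],
    [0.60, 0.20, 0.10, 0.10],
])

print(classify_rows(attn))
```

A training loop could log these labels per attention head, or turn the entropy ratio into a penalty term, to discourage both degenerate patterns.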

Cybersecurity Applications

The Artificial Intelligence Driven Entropy (AIDE) model applies entropy-aware training in cybersecurity. AIDE enhances cryptographic systems by increasing randomness, strengthening defenses against cyber threats. Its Threat Entropy Index (TEI) quantifies malicious behavior likelihood, enabling dynamic security prioritization. This complements traditional controls like CAPTCHA, adding an adaptive, entropy-based layer of protection.

Benefits of Entropy-Aware Training

Enhanced Model Stability

Entropy-aware training helps guard against degenerate states such as model collapse or overconfident overfitting, supporting consistent and reliable outputs. This is critical for applications in finance, healthcare, and autonomous systems where errors have significant consequences.

Improved Generalization

By minimizing entropy and aligning predictions with true data distributions, entropy-aware training enhances generalization to unseen data, vital for dynamic environments like financial markets or social media analytics.

Increased Trust and Explainability

High entropy erodes trust in AI, particularly in opaque models. Entropy-aware training fosters predictability and coherence, aligning with Explainable AI (XAI) principles, making decisions more transparent and trustworthy.

Privacy Preservation (Proposed)

Entropy regulation in LLMs could reduce overfitting to specific data points, minimizing data leakage risks in cloud-based applications. While plausible due to entropy regularization’s use in machine learning, this application in LLMs awaits broader validation.

Challenges and Considerations

Computational Complexity

Real-time entropy calculations and dynamic adjustments increase computational overhead. Organizations must balance performance benefits against resource costs, especially for large-scale models.

Data Quality and Bias

Entropy-aware training requires high-quality, representative data. Driving a model toward confident, low-entropy predictions on biased or incomplete data can entrench those biases, so regular data auditing is necessary.

Scalability

While effective in GCNs and RL, scaling entropy-aware training to larger models or real-time applications remains challenging. Future research must address efficient implementation in distributed systems.

Real-World Applications

Healthcare

Entropy-aware training could strengthen diagnostic models by favoring low-entropy, high-confidence predictions and flagging uncertain cases for review. Entropy-aware GCNs, for example, may improve medical imaging classification, aiding detection of conditions like cancer.

Finance

In financial services, entropy-aware training supports fraud detection and risk assessment by adapting to evolving threats, ensuring reliable predictions in dynamic markets.

Autonomous Systems

Entropy-aware training is critical for autonomous systems like UAVs, where optimal entropy levels ensure safe and efficient navigation in complex environments.

Cybersecurity

The AIDE model leverages entropy-aware training to enhance cryptographic systems, providing robust protection against cyber threats in industries like banking and telecommunications.

Future Directions

Toward a Unified Entropy-Aware Framework

A unified entropy-aware training framework spanning GCNs, RL, LLMs, and other domains is a visionary goal. The conceptual Entropy-to-Coherence Alignment Framework (ECAF) proposes embedding human-guided coherence into AI architectures, transforming randomness into meaningful outputs. While not yet realized, ECAF offers a roadmap for advancing Artificial General Intelligence (AGI) and Human-AI Collaboration, aligning AI with human values. This remains a speculative direction requiring extensive research.

Scalability and Real-Time Applications

Advancements in distributed computing and edge AI could enable entropy-aware training in real-time, resource-constrained environments. For example, entropy-aware distributed GNNs could optimize performance in large-scale applications like social network analysis or supply chain management.

Entropy-aware training is a promising approach to building self-regulating AI systems that are stable, efficient, and trustworthy. Verified applications in GCNs, RL, and UAVs demonstrate its value, while proposed extensions to LLMs highlight its potential. By applying entropy from information theory, it addresses challenges in reliability, generalization, and privacy. For organizations, adopting entropy-aware training is a strategic step toward AI systems that perform reliably and align with ethical goals. As research advances, entropy-aware training could reshape AI development, fostering systems that earn trust and confidence.
