Digital twins, when combined with predictive analytics, are redefining how businesses simulate, monitor, and optimize real-world systems—in real time and at scale.

Digital twins, virtual replicas of physical systems synchronized in real time, are transforming industries by merging IoT, AI, and cloud computing into one dynamic ecosystem. When paired with predictive analytics, these technologies give businesses the power to simulate, anticipate, and optimize complex operations. With market forecasts exceeding $250 billion by 2032 and adoption accelerating across manufacturing, healthcare, and urban infrastructure, this article explores the underlying architecture, use cases, key players, and real-world impact of digital twins and predictive intelligence.

The Transformative Potential of Digital Twins and Predictive Analytics

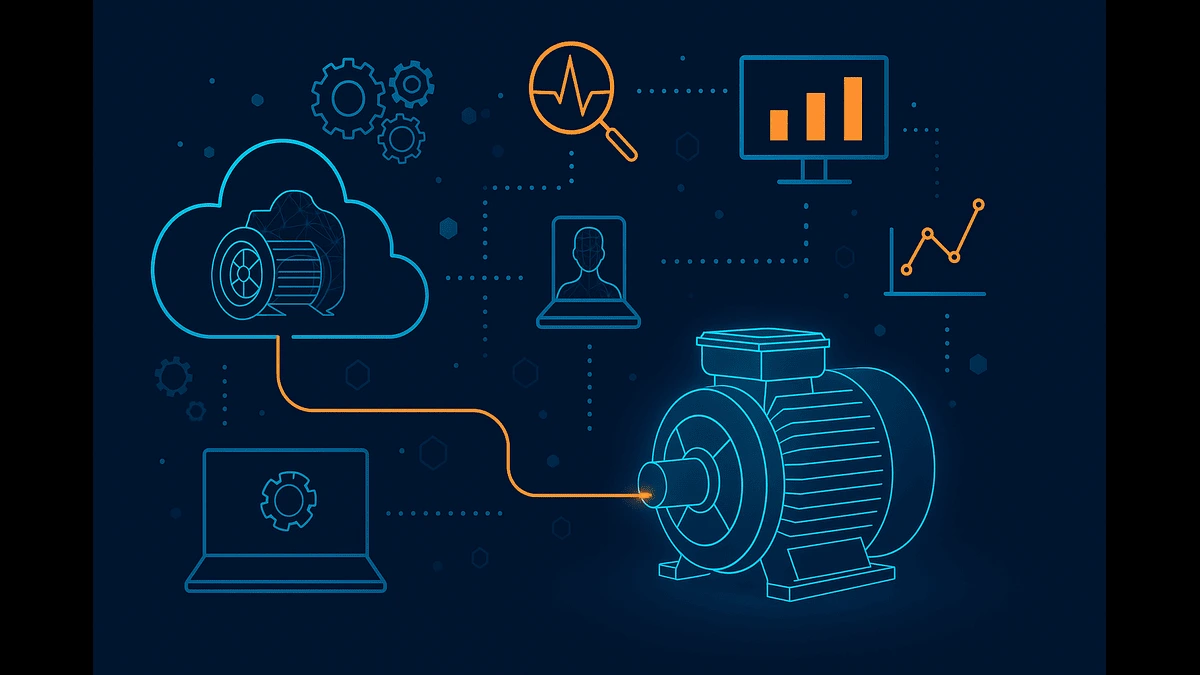

Digital twins—sophisticated virtual models of real-world assets, processes, or systems—are fast becoming foundational to digital transformation efforts. Leveraging a combination of IoT sensors, edge computing, cloud infrastructure, and AI-driven analytics, digital twins provide organizations with a real-time, interactive mirror of their operations. This enables not only monitoring and diagnostics, but also advanced simulation and prescriptive decision-making.

When enhanced with predictive analytics, which uses machine learning (ML) models to forecast future states based on historical and real-time data, digital twins become not just reactive but proactive. They evolve into intelligent systems capable of predicting breakdowns, optimizing resource use, and improving strategic outcomes.

According to Grand View Research, the global digital twin market was valued at $24.97 billion in 2024 and is projected to reach $155.84 billion by 2030, a CAGR of 34.2%. Longer-range forecasts run higher: Fortune Business Insights projects growth from $24.48 billion in 2025 to $259.32 billion by 2032, a CAGR of roughly 40%. Key drivers include increasing industrial IoT adoption, growing investment in AI platforms, and demand for real-time, data-driven optimization. Industry leaders like Siemens, NVIDIA, Microsoft, and GE are actively shaping this landscape.
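These growth rates follow the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. A minimal Python sketch that checks the Fortune Business Insights figures (US$24.48 billion in 2025 to US$259.32 billion in 2032, i.e. seven years of compounding); the helper names are illustrative:

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Fortune Business Insights forecast: 2025 -> 2032 (7 years), in US$ billions
rate = cagr(24.48, 259.32, 7)
print(f"Implied CAGR: {rate:.1%}")
print(f"Round-trip check: {project(24.48, rate, 7):.2f}")
```

The implied rate of about 40% matches the figure the report quotes. Note that a CAGR describes smooth compounding only and says nothing about year-to-year volatility.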

Technical Architecture: Building a Digital Twin Ecosystem

Constructing a digital twin system involves multiple layers of hardware and software, each playing a crucial role in collecting, transmitting, processing, and visualizing data.

- IoT Sensors: Embedded in physical assets such as engines, medical devices, or manufacturing equipment, sensors capture live data points such as temperature, pressure, vibration, and humidity. These inputs serve as the sensory nerves of the system.

- Edge Computing: Edge devices, such as the NVIDIA Jetson Nano or Raspberry Pi 4, handle preliminary data processing close to the source. This reduces latency and bandwidth usage, ensuring faster and more reliable data flows.

- Connectivity Protocols: Protocols like MQTT (Message Queuing Telemetry Transport) and OPC UA (Open Platform Communications Unified Architecture) facilitate real-time communication between edge devices and cloud platforms.

- Cloud Platforms: Data is transferred to scalable cloud environments like AWS IoT Core or Azure IoT Hub, which manage storage, computation, and secure device connectivity. (Google retired its Cloud IoT Core service in 2023.)

- AI/ML Engines: Machine learning models, built using frameworks such as TensorFlow, PyTorch, or scikit-learn, analyze streaming and historical data to detect anomalies, predict failures, and recommend optimizations.

- Visualization Tools: Dashboards powered by tools like Grafana, Power BI, or Tableau provide intuitive visual representations of digital twin behavior, KPIs, and alerts, helping decision-makers interact with insights in real time.
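To make the edge-to-cloud hop concrete, here is a minimal sketch of the kind of message an edge device might publish over MQTT. The topic layout (`site/line/asset/telemetry`), the field names, and the asset IDs are hypothetical; real deployments define their own schema, and the actual publish call would come from an MQTT client library such as paho-mqtt (shown only as a comment).

```python
import json
import time
from dataclasses import dataclass, asdict


@dataclass
class Telemetry:
    """One aggregated sensor sample (hypothetical schema)."""
    asset_id: str
    timestamp: float      # Unix epoch seconds
    temperature: float    # degrees Celsius
    vibration: float      # RMS velocity, mm/s
    pressure: float       # bar


def topic_for(site: str, line: str, asset_id: str) -> str:
    # Hierarchical topics let subscribers filter with MQTT wildcards,
    # e.g. "plant-a/+/+/telemetry" for every asset at one site.
    return f"{site}/{line}/{asset_id}/telemetry"


def encode(sample: Telemetry) -> bytes:
    # Compact JSON (no extra whitespace) keeps payloads small on constrained links.
    return json.dumps(asdict(sample), separators=(",", ":")).encode("utf-8")


sample = Telemetry("motor-07", time.time(), 71.4, 2.8, 5.1)
topic = topic_for("plant-a", "line-1", sample.asset_id)
payload = encode(sample)
print(topic, payload)
# With paho-mqtt, an already-connected client would send it via:
# client.publish(topic, payload, qos=1)
```

A hierarchical topic per asset keeps routing in the broker simple, while the cloud side only needs to parse one JSON shape.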

Data Pipeline and Control Loop

A typical digital twin data flow looks like this:

- Sensor Layer: Captures environmental and operational data.

- Edge Layer: Filters noise and aggregates data.

- Cloud Layer: Performs in-depth analysis, model training, and secure data archival.

- Twin Simulation Layer: Updates virtual models using real-time inputs.

- Visualization and Control Layer: Displays predictions and enables human or automated interventions.

The loop is continuous: simulations influence decisions, decisions trigger actions, and actions generate new data.
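The loop can be sketched in a few lines of Python. Everything here is a simplified assumption for illustration: the edge layer takes a median over a short window to suppress spikes, the "twin" is a small state object holding recent filtered temperatures, the forecast is a naive linear extrapolation standing in for a trained model, and the 80 °C limit and `reduce_load` action are hypothetical.

```python
from collections import deque
from statistics import median


class MotorTwin:
    """Toy digital twin: keeps recent filtered temperatures and forecasts ahead."""

    def __init__(self, history: int = 5, limit_c: float = 80.0):
        self.temps = deque(maxlen=history)
        self.limit_c = limit_c  # hypothetical alarm threshold

    def update(self, filtered_temp: float) -> None:
        self.temps.append(filtered_temp)

    def forecast(self, steps_ahead: int = 3) -> float:
        # Naive linear extrapolation from the average step-to-step change.
        if len(self.temps) < 2:
            return self.temps[-1] if self.temps else float("nan")
        slope = (self.temps[-1] - self.temps[0]) / (len(self.temps) - 1)
        return self.temps[-1] + slope * steps_ahead

    def decide(self) -> str:
        return "reduce_load" if self.forecast() > self.limit_c else "no_action"


def edge_filter(raw_window):
    # Median suppresses single-sample spikes before data leaves the edge.
    return median(raw_window)


twin = MotorTwin()
raw_stream = [[70, 71, 70], [72, 99, 72], [74, 74, 75], [76, 77, 76], [78, 78, 79]]
for window in raw_stream:                  # sensor layer -> edge layer
    twin.update(edge_filter(window))       # twin simulation layer
    action = twin.decide()                 # visualization / control layer
    print(twin.temps[-1], round(twin.forecast(), 1), action)
```

The spike of 99 in the second window never reaches the twin, which is the point of filtering at the edge. The twin starts recommending `reduce_load` once the projected temperature crosses the limit, before the measured value does; a production twin would replace `forecast` with a trained model such as the LSTM discussed next.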

Predictive Analytics: Intelligence at the Core

Predictive analytics serves as the cognitive engine powering digital twins, enabling systems to anticipate future events, detect anomalies, and make data-driven recommendations. While the digital twin mirrors the present, predictive analytics extends the mirror into the future. This section expands on the core models often used in such systems, providing a detailed look at their mechanics, benefits, and industry applications in simple, understandable terms.

Long Short-Term Memory (LSTM)

Imagine you’re trying to predict tomorrow’s weather. You wouldn’t just look at today—you’d look at patterns over the past few days. LSTM works similarly for machines. It learns from past sequences to predict what might happen next.

Technically, LSTM is a type of neural network designed to work with sequences—like a series of temperature readings over time. It remembers important patterns from the past and forgets the irrelevant ones using special gates, much like your brain knows when to remember something important and when to ignore background noise.
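For readers who want to see the gates, here is a minimal NumPy sketch of a single LSTM time step in the standard formulation (input, forget, and output gates plus a candidate cell state). The weights are random placeholders rather than a trained model, and the gate ordering inside the stacked weight matrices is a choice made for this sketch; frameworks such as Keras perform the same arithmetic with learned weights.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x_t: input at time t, shape (n_in,)
    h_prev, c_prev: previous hidden and cell state, shape (n_hidden,)
    W: (4*n_hidden, n_in), U: (4*n_hidden, n_hidden), b: (4*n_hidden,)
    Gate order in the stacked weights: input, forget, candidate, output.
    """
    n = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:n])          # input gate: how much new information to write
    f = sigmoid(z[n:2 * n])      # forget gate: how much old memory to keep
    g = np.tanh(z[2 * n:3 * n])  # candidate values to write
    o = sigmoid(z[3 * n:4 * n])  # output gate: how much memory to expose
    c_t = f * c_prev + i * g     # updated long-term memory (cell state)
    h_t = o * np.tanh(c_t)       # new hidden state / output
    return h_t, c_t


rng = np.random.default_rng(0)
n_in, n_hidden = 2, 4            # e.g. temperature and vibration as inputs
W = rng.normal(scale=0.5, size=(4 * n_hidden, n_in))
U = rng.normal(scale=0.5, size=(4 * n_hidden, n_hidden))
b = np.zeros(4 * n_hidden)

h = np.zeros(n_hidden)
c = np.zeros(n_hidden)
sequence = np.array([[0.20, 0.10], [0.25, 0.12], [0.31, 0.15]])  # scaled readings
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h)
```

The forget gate multiplies the old cell state, so values near 0 erase memory and values near 1 carry it forward unchanged; that is the "remember or ignore" behavior described above.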

- Ideal Use Case: Predicting values that change over time, such as heat, pressure, or vibration.

- Strengths: Learns from history, handles complex patterns, great for time-based predictions.

- Limitation: Needs a lot of data and computing power.

- Example in Use: Siemens uses LSTM in factory machines to forecast overheating or motor failure before it happens.

Python Code Example: LSTM for Predictive Maintenance

# Predicting future machine temperature from past sensor data
import numpy as np
import pandas as pd
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense
from sklearn.preprocessing import MinMaxScaler

# Load sensor data (expects 'temperature' and 'vibration' columns)
data = pd.read_csv('sensor_data.csv')

# Scale both features to the [0, 1] range
scaler = MinMaxScaler()
data_scaled = scaler.fit_transform(data[['temperature', 'vibration']])

# Create sliding-window sequences for time series prediction
def create_sequences(data, seq_length):
    X, y = [], []
    for i in range(len(data) - seq_length):
        X.append(data[i:i + seq_length])   # window of past readings
        y.append(data[i + seq_length, 0])  # next temperature (column 0)
    return np.array(X), np.array(y)

X, y = create_sequences(data_scaled, seq_length=10)

# Build the LSTM model: 10 time steps x 2 features in, 1 value out
model = Sequential([
    LSTM(50, activation='relu', return_sequences=True, input_shape=(10, 2)),
    LSTM(50, activation='relu'),
    Dense(1)
])
model.compile(optimizer='adam', loss='mse')
model.fit(X, y, epochs=20, batch_size=32)

# Predict the next temperature value from the latest 10 readings
latest_sequence = data_scaled[-10:].reshape((1, 10, 2))
prediction = model.predict(latest_sequence)

# Undo the scaling; 0 is a placeholder for the vibration column
predicted_temp = scaler.inverse_transform([[prediction[0][0], 0]])[0][0]
print(f"Predicted Temperature: {predicted_temp:.2f}°C")

Diagram: LSTM Architecture

A[Input Sequence] --> B[LSTM Layer 1] --> C[LSTM Layer 2] --> D["Dense Layer (Output)"] --> E[Predicted Value]

This architecture can be visualized like a layered brain for machines. The input layer feeds time-series data (like temperature and vibration over time) into the LSTM layers, which act as memory filters that decide what historical trends to remember. These filtered insights then pass into the dense layer that compacts them into a single numeric prediction—such as the machine’s future temperature. The final output represents a forecasted value, helping industries preemptively act on maintenance needs before breakdowns occur.

Isolation Forest

Think of operations engineers in a factory watching for anything strange. Isolation Forests are good at spotting odd behavior, like a machine suddenly vibrating more than usual.

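They do this by building many random decision trees, each of which repeatedly splits the data on a randomly chosen feature and threshold, and checking how quickly each reading gets isolated. The fewer splits needed to separate a reading from the rest, the more likely it is an anomaly.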

- Ideal Use Case: Spotting weird sensor readings that could mean failure.

- Strengths: Fast, works well on big data, good at catching surprises.

- Limitation: May miss subtle or overlapping anomalies.

- Example in Use: Used in oil plants to catch pressure leaks before they cause damage.

Python Code Example: Isolation Forest for Anomaly Detection

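from sklearn.ensemble import IsolationForest
import pandas as pd

data = pd.read_csv('sensor_data.csv')
features = data[['temperature', 'vibration']]

# Assume roughly 5% of readings are anomalous; fix the seed for reproducible results
model = IsolationForest(contamination=0.05, random_state=42)
model.fit(features)

# predict() returns -1 for anomalies and 1 for normal points
data['anomaly'] = model.predict(features)
print(data[data['anomaly'] == -1])

Diagram: Isolation Forest Workflow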

A[Sensor Data] --> B[Data Subsampling] --> C[Random Tree Construction] --> D[Path Length Computation] --> E[Isolation Score] --> F[Anomaly Detection]

This workflow shows how Isolation Forest detects outliers. Each tree repeatedly splits a random subsample of the sensor data on a randomly chosen feature and threshold. If a data point gets separated from the rest early in the tree structure, it's likely an anomaly: the fewer splits needed to isolate a point, the more "strange" it is considered. The algorithm averages this path length across all trees into an isolation score for each observation and flags those with the highest anomaly scores for review. It's like letting multiple guards independently scan the data and vote on anything suspicious.
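In the standard Isolation Forest formulation, a point's score is s(x, n) = 2^(-E[h(x)] / c(n)), where E[h(x)] is its average path length across trees and c(n) = 2H(n-1) - 2(n-1)/n is the average path length of an unsuccessful binary-search-tree lookup over n points (H is the harmonic number, approximated by ln(i) + 0.5772156649). A minimal Python sketch of just the scoring step; the path lengths fed in are made-up numbers for illustration.

```python
import math

EULER_GAMMA = 0.5772156649


def average_path_length(n: int) -> float:
    """c(n): expected path length of an unsuccessful BST search over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + EULER_GAMMA  # approximates H(n-1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def anomaly_score(mean_path_length: float, n: int) -> float:
    """s(x, n) in (0, 1]; values near 1 indicate likely anomalies."""
    return 2.0 ** (-mean_path_length / average_path_length(n))


n = 256  # points per tree (a common subsample size)
for label, depth in [("isolated quickly", 2.0), ("typical point", 10.0)]:
    print(label, round(anomaly_score(depth, n), 3))
```

A point whose average depth equals c(n) scores exactly 0.5, while quickly isolated points approach 1. scikit-learn's `IsolationForest.score_samples` returns the negative of this score, so lower values there mean more anomalous.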

Random Forest and XGBoost

These are like having a committee of decision-makers rather than one person. Each tree in the forest gives an opinion, and the group decides together.

- Random Forest grows many trees and averages their answers.

- XGBoost improves accuracy by fixing mistakes from previous trees.

- Ideal Use Case: Deciding what caused a machine failure, or how long it’ll work.

- Strengths: High accuracy, handles complex data, resists overfitting with sensible tuning.

- Limitation: Can be a bit of a black box, needs well-prepared data.

- Example in Use: Used in car factories to predict part wear-out time.

Diagram: Ensemble Tree Model (Random Forest/XGBoost)

A[Input Features] --> B1[Tree 1] --> C[Prediction 1]
A --> B2[Tree 2] --> D[Prediction 2]
A --> B3[Tree 3] --> E[Prediction 3]
C & D & E --> F[Final Decision]

This ensemble method aggregates multiple decision trees. In a Random Forest, each tree is trained independently on a random sample of the data, and the final result is the majority vote (for classification) or the average (for regression) of their predictions. XGBoost instead builds trees one at a time, with each new tree correcting the errors left by the trees before it, and adds their contributions together. It's like consulting multiple experts: a Random Forest averages their independent advice, while boosting has each expert fix what the previous ones got wrong.
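A minimal scikit-learn sketch of both ideas on synthetic remaining-useful-life data. The features and the data-generating formula are invented for illustration, and scikit-learn's `GradientBoostingRegressor` stands in for XGBoost here (the separate `xgboost` package's `XGBRegressor` follows the same fit/predict pattern).

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split

# Hypothetical features: operating hours, mean vibration, mean temperature
rng = np.random.default_rng(42)
n = 1000
hours = rng.uniform(0, 5000, n)
vibration = rng.normal(3.0, 0.8, n)
temperature = rng.normal(70, 5, n)
X = np.column_stack([hours, vibration, temperature])

# Invented ground truth: remaining life shrinks with use, vibration, and heat
rul = 6000 - hours - 300 * vibration - 20 * (temperature - 70) + rng.normal(0, 100, n)

X_train, X_test, y_train, y_test = train_test_split(X, rul, test_size=0.2, random_state=0)

# Bagging: independent trees on bootstrap samples, predictions averaged
forest = RandomForestRegressor(n_estimators=200, random_state=0).fit(X_train, y_train)

# Boosting: shallow trees added one at a time, each fit to the current residuals
boosted = GradientBoostingRegressor(n_estimators=300, learning_rate=0.05, max_depth=3,
                                    random_state=0).fit(X_train, y_train)

for name, model in [("random forest", forest), ("gradient boosting", boosted)]:
    mae = mean_absolute_error(y_test, model.predict(X_test))
    print(f"{name}: MAE = {mae:.0f} hours")

# Which inputs drive the forest's predictions
print(dict(zip(["hours", "vibration", "temperature"], forest.feature_importances_.round(3))))
```

The feature importances are one way to partly open the "black box" mentioned above, since they show which sensor signals the ensemble leans on most.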

Reinforcement Learning (RL)

Imagine teaching a robot to walk by giving it a thumbs-up when it does well and nothing when it fails. That’s reinforcement learning.

It learns by doing—trying things out, seeing what works, and adjusting to do better next time. It’s used when conditions keep changing and the system has to adapt.

- Ideal Use Case: Smart buildings adjusting heating/cooling based on time of day and weather.

- Strengths: Learns over time, handles change, finds efficient solutions.

- Limitation: Needs lots of time or simulation to learn.

- Example in Use: Google DeepMind used RL to cut cooling costs in data centers.

Diagram: Reinforcement Learning Loop

A[Agent] -->|Action| B[Environment] --> C[Observation + Reward] --> A

In reinforcement learning, an agent (e.g., a control system) interacts with its environment (e.g., a smart building). Based on the feedback (rewards or penalties), it learns which actions lead to the best outcomes. Over time, it refines its strategy to maximize total rewards. This makes it ideal for dynamic and adaptive systems where continuous learning is crucial.
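A minimal tabular Q-learning sketch of the smart-building idea. The environment is a toy: room temperature is an integer from 15 to 29 °C, the agent can cool, idle, or heat by one degree per step, random drift nudges the room up or down 20% of the time, and the reward is the negative distance from a 22 °C setpoint minus a small energy penalty for running equipment. All of these dynamics and numbers are assumptions for illustration, not a model of any real building or of DeepMind's system.

```python
import numpy as np

LOW, HIGH = 15, 29                 # discretized room temperatures (states), degrees C
ACTIONS = [-1, 0, 1]               # cool, idle, heat (degrees per step)
NAMES = ["cool", "idle", "heat"]
SETPOINT = 22
ENERGY_COST = 0.2                  # hypothetical penalty for running HVAC


def step(temp, a, rng):
    """Toy environment: apply action a plus random drift; return (next_temp, reward)."""
    u = rng.random()
    drift = -1 if u < 0.1 else (1 if u > 0.9 else 0)   # 10% cooler, 10% warmer
    next_temp = min(max(temp + ACTIONS[a] + drift, LOW), HIGH)
    reward = -abs(next_temp - SETPOINT) - (ENERGY_COST if ACTIONS[a] != 0 else 0.0)
    return next_temp, reward


def train(episodes=3000, steps=30, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = np.random.default_rng(seed)
    q = np.zeros((HIGH - LOW + 1, len(ACTIONS)))
    for _ in range(episodes):
        temp = int(rng.integers(LOW, HIGH + 1))      # random starting temperature
        for _ in range(steps):
            s = temp - LOW
            # Epsilon-greedy: mostly exploit the best known action, sometimes explore
            if rng.random() < epsilon:
                a = int(rng.integers(len(ACTIONS)))
            else:
                a = int(q[s].argmax())
            temp, reward = step(temp, a, rng)
            # Q-learning update toward reward + discounted best value of the next state
            q[s, a] += alpha * (reward + gamma * q[temp - LOW].max() - q[s, a])
    return q


q_table = train()
policy = {t: NAMES[int(q_table[t - LOW].argmax())] for t in range(LOW, HIGH + 1)}
print(policy)
```

The learned table should end up heating when the room is cold, idling near the setpoint, and cooling when it is hot. In practice an agent like this would be trained against a simulation (for example, the digital twin) rather than the live building, which reflects the "needs lots of time or simulation" limitation above.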

Use Case Example: Siemens reportedly applies LSTM models within its Xcelerator platform to predict overheating in rotating equipment, contributing to a 30% reduction in unplanned downtime.

Data preprocessing involves outlier removal, normalization (often between 0 and 1), and transformation into supervised learning format—especially important for LSTM and sequence models.
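The three preprocessing steps can be sketched with pandas and NumPy. The IQR-based clipping rule (1.5 × IQR) is one common choice among several, and the small set of readings below is invented for illustration.

```python
import numpy as np
import pandas as pd


def clip_outliers(df: pd.DataFrame, k: float = 1.5) -> pd.DataFrame:
    """Clip each column to [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = df.quantile(0.25), df.quantile(0.75)
    iqr = q3 - q1
    return df.clip(lower=q1 - k * iqr, upper=q3 + k * iqr, axis=1)


def min_max(df: pd.DataFrame) -> pd.DataFrame:
    """Scale each column to [0, 1] (assumes no constant columns)."""
    return (df - df.min()) / (df.max() - df.min())


def to_supervised(values: np.ndarray, window: int, target_col: int = 0):
    """(time, features) array -> (samples, window, features) inputs and next-step targets."""
    X = np.stack([values[i:i + window] for i in range(len(values) - window)])
    y = values[window:, target_col]
    return X, y


readings = pd.DataFrame({
    "temperature": [60.0, 61.0, 60.5, 250.0, 62.0, 62.5, 63.0, 63.5],  # 250 is a glitch
    "vibration":   [2.0, 2.1, 2.0, 2.2, 2.3, 2.2, 2.4, 2.5],
})
clean = min_max(clip_outliers(readings))
X, y = to_supervised(clean.to_numpy(), window=3)
print(X.shape, y.shape)
```

Clipping before scaling matters: left in place, the 250 °C glitch would squash every genuine reading into a narrow band near 0 after min-max scaling.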

Code Example: LSTM-Based Predictive Maintenance Model

import numpy as np
import pandas as pd
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense
from sklearn.preprocessing import MinMaxScaler

# Load and scale data (expects 'temperature' and 'vibration' columns)
data = pd.read_csv('sensor_data.csv')
scaler = MinMaxScaler()
scaled = scaler.fit_transform(data[['temperature', 'vibration']])

# Sequence creation: windows of seq_len readings -> next temperature
def create_sequences(data, seq_len):
    X, y = [], []
    for i in range(len(data) - seq_len):
        X.append(data[i:i + seq_len])
        y.append(data[i + seq_len, 0])  # column 0 = temperature
    return np.array(X), np.array(y)

X, y = create_sequences(scaled, seq_len=10)

# Build LSTM model (64 -> 32 units, then a single output value)
model = Sequential([
    LSTM(64, activation='relu', return_sequences=True, input_shape=(10, 2)),
    LSTM(32, activation='relu'),
    Dense(1)
])
model.compile(optimizer='adam', loss='mse')

# Keras holds out the last 20% of sequences for validation
model.fit(X, y, epochs=30, batch_size=32, validation_split=0.2)

# Predict temperature from the most recent 10 readings
latest_seq = scaled[-10:].reshape(1, 10, 2)
pred = model.predict(latest_seq)

# Invert the scaling; 0 is a placeholder for the vibration column
temp = scaler.inverse_transform([[pred[0][0], 0]])[0][0]
print(f"Predicted Temperature: {temp:.2f}°C")

This example mirrors a factory scenario: it predicts a machine's next temperature reading from its last 10 data points, giving maintenance teams a chance to act before overheating occurs.

Real-World Applications Across Industries

Digital twins have transitioned from theoretical constructs to operational cornerstones in various sectors. Their integration into real-world workflows is reshaping asset management, product development, infrastructure optimization, and patient care. Below is a detailed, fact-based exploration of how digital twins are actively used across major industries, based on publicly verifiable use cases and enterprise deployments.

Manufacturing: Precision, Uptime, and Quality Optimization

Digital twins are widely adopted in both discrete and process manufacturing to monitor equipment health, simulate production line changes, and predict failures. According to a 2023 Capgemini Research Institute report, over 65% of manufacturers globally are either implementing or piloting digital twin solutions.

Siemens, a leader in this space, leverages its Xcelerator platform to create comprehensive digital replicas of factory lines. These digital twins integrate IoT sensor data with AI and simulation models to:

- Predict tool wear in CNC (Computer Numerical Control) machines.

- Simulate material flow and robotic assembly sequences.

- Reduce changeover time and optimize layouts before implementation.

Case Study: Foxconn and Siemens partnered to deploy digital twins in high-volume electronics manufacturing. The result was a reported 30% improvement in first-time-right production quality and a 25% reduction in line-balancing errors.

Case Study: Daimler Truck and Siemens. In 2023, Daimler Truck announced a strategic collaboration with Siemens to build an integrated digital engineering platform across its global manufacturing operations. Powered by Siemens Xcelerator and Teamcenter software, the digital twin platform enables:

- Seamless connection of product design, process planning, and production engineering data.

- Real-time collaboration across Daimler Truck’s global production network.

- Virtual validation of manufacturing processes before deployment on physical lines.

The initiative supports Daimler Truck’s commitment to flexible, sustainable, and scalable truck manufacturing. It enables the company to simulate production scenarios virtually, detect inefficiencies in digital form, and accelerate the implementation of new production technologies. This digital-first approach reduces rework, increases transparency across teams, and supports faster decision-making.

Healthcare: Personalized Medicine and Clinical Decision Support

In healthcare, digital twins are enabling a shift from reactive treatment to predictive and personalized medicine. Patient-specific digital twins are constructed using medical imaging, genomics, biometrics, and wearable IoT data.

Philips, in collaboration with academic hospitals in Europe, has developed cardiac digital twins that model individual patient heart behavior using MRI data, ECG inputs, and physiological modeling. These twins help:

- Simulate disease progression for heart failure and arrhythmias.

- Optimize treatment plans, such as pacemaker placements or surgical strategies.

- Reduce risks of adverse outcomes during surgery.

Case Study: The Cardiovascular Digital Twin pilot at Erasmus MC in the Netherlands showed a 20% improvement in diagnostic precision for patients with complex heart conditions.

Additionally, startups like Twin Health (U.S.) have developed digital twins for metabolic health. Their system creates a dynamic model of a patient’s metabolism using glucose monitors, diet logs, and sleep data to recommend real-time behavioral interventions. In clinical trials, Twin Health’s platform helped reduce HbA1c levels in type-2 diabetic patients without medication escalation.

Urban Planning: Smart Infrastructure and Resource Efficiency

Cities are deploying digital twins to create smart infrastructure that adapts in real time. These models integrate geographic information systems (GIS), IoT networks, climate data, and transport models to plan and simulate city dynamics.

The City of Jacksonville, in collaboration with the University of Florida and NVIDIA, created a real-time digital twin of its urban core. Key functionalities include:

- Flood simulation under extreme rainfall using hydrodynamic models.

- Traffic flow analysis using live GPS and traffic camera data.

- Energy usage optimization for city buildings through HVAC modeling.

Outcomes: According to the Jacksonville Smart City Program Office (2024), the initiative led to:

- 15% energy consumption reduction across monitored municipal facilities.

- 20% improvement in emergency response time through predictive traffic routing.

- More accurate climate resilience planning and insurance policy adjustments.

Other cities, such as Singapore, Helsinki, and Shanghai, have also built city-scale digital twins (in Singapore's case covering the entire city-state), offering policymakers a simulation sandbox to test zoning, utilities, and transportation outcomes before real-world execution.

Aerospace & Energy: Predictive Maintenance and System Reliability

Digital twins are mission-critical in high-value asset industries like aviation and energy, where downtime costs millions and safety is paramount.

GE Digital uses digital twins to simulate gas turbines, jet engines, and wind turbines. Their twin systems integrate SCADA sensor data, physics-based simulations, and ML models to monitor:

- Blade fatigue and combustor degradation in jet engines.

- Vibration and flow anomalies in power turbines.

- Temperature-pressure correlations in oil & gas pipelines.

Case Study: GE's twin-powered APM (Asset Performance Management) solution, deployed at Delta Air Lines and Petrobras, resulted in:

- A 15% reduction in unplanned maintenance events.

- Up to $12M/year savings in avoided downtime and part replacements.

- Improved inspection accuracy and scheduling efficiency.

In offshore wind farms, Siemens Gamesa uses digital twins to simulate turbine behavior under varying wind conditions, enabling dynamic blade angle optimization. This boosts annual energy production (AEP) by up to 6%, as reported in a 2023 technical paper published with DNV.

Market Leaders and Strategic Investments

As digital twin adoption accelerates, leading technology firms are making substantial investments to dominate the space, while startups and governments are fueling innovation and scaling.

Siemens has committed over $10 billion toward digitalization initiatives since 2020, with a strong focus on integrating AI, simulation, and industrial automation. Its Xcelerator platform combines digital twin modeling, simulation, and real-time analytics, serving sectors ranging from automotive to electronics.

In 2022, Siemens announced a strategic partnership with NVIDIA to integrate Xcelerator with Omniverse, enabling “industrial metaverse” capabilities. These physics-based 3D simulation environments support:

- Immersive product and factory design collaboration in real time.

- Validation of autonomous systems under simulated operational conditions.

- Data fidelity improvements in predictive engineering and logistics planning.

NVIDIA’s Omniverse platform is central to its digital twin strategy. It provides GPU-accelerated, interoperable 3D design environments that are crucial for high-fidelity industrial simulation. Omniverse uses USD (Universal Scene Description) for collaboration across tools like Siemens NX, Autodesk, and Unreal Engine. In 2023, NVIDIA reported over 700 companies using Omniverse Enterprise, with use cases spanning factory layout, warehouse robotics, and virtual commissioning.

Microsoft offers Azure Digital Twins, a platform that enables spatial intelligence graphs representing digital replicas of physical environments. It integrates with Azure Synapse Analytics for big data processing and Azure Machine Learning for AI-driven predictive analytics. Microsoft clients use Azure Digital Twins in sectors like smart buildings, utilities, and supply chain operations.

- Case in Point: Johnson Controls partnered with Microsoft to integrate Azure Digital Twins into its OpenBlue platform, enabling real-time energy optimization in large commercial buildings.

GE Digital is a key player in the energy and aviation sectors. Its Predix and APM (Asset Performance Management) platforms use digital twins to model and monitor turbines, compressors, and jet engines. GE’s twins combine physics-based models with ML to deliver predictive maintenance, reduce downtime, and extend asset life.

IBM, through its Maximo Application Suite, leverages digital twins for operations in aerospace, railways, and utilities. The suite provides AI-powered insights, IoT data integration, and reliability modeling to support asset-intensive industries. IBM is also involved in joint research on trustworthy AI twins with MIT and other academic partners.

Emerging Players: Startups are also pushing innovation:

- MetAI, a U.S.-based startup, raised $4 million in 2025 to build 3D digital twin simulation tools for retail logistics and warehouse optimization.

- Cityzenith, known for its SmartWorldOS platform, is helping cities deploy climate-resilient digital twins.

Government Initiatives: The U.S. CHIPS and Science Act, signed in 2022, provides more than $50 billion for semiconductor manufacturing, R&D, and workforce programs, with indirect implications for digital twin platforms that rely on high-performance computing. In 2024, the CHIPS for America program also announced a Manufacturing USA institute focused on digital twins for semiconductor manufacturing, and agencies such as NIST and DOE have launched pilot initiatives integrating digital twins into smart manufacturing and energy grid resilience studies.

Implementation Strategies for Enterprises

Adopting digital twin technology requires more than procuring tools—it demands a strategic transformation roadmap. Below is a breakdown of practical implementation strategies that organizations across sectors are deploying to derive maximum ROI and operational value from digital twins.

Use Case Definition and Scope Management

Begin with a narrowly scoped, high-impact pilot project. Successful implementations typically focus on a single, critical asset or process—such as predictive maintenance for a turbine or optimization of HVAC systems in a commercial building. This minimizes complexity while demonstrating measurable outcomes to stakeholders.

- Example: Airbus initially deployed digital twins to simulate engine health in specific aircraft fleets before scaling the solution to broader maintenance programs.

Data Infrastructure and Maturity Assessment

The backbone of any digital twin is high-quality data. Enterprises must assess the maturity of their existing sensor networks, data ingestion pipelines, and data governance frameworks. Key aspects include:

- Sensor granularity and calibration

- Edge computing capabilities

- Secure cloud integration (e.g., AWS IoT Core, Azure IoT Hub)

- Historical data availability for training AI models
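A quick way to start this assessment is to profile a sample of historical sensor data for completeness and sampling regularity. A minimal pandas sketch; the column names, the expected 1-second sampling interval, the 1.5× gap tolerance, and the tiny dataset are assumptions for illustration.

```python
import pandas as pd


def profile_sensor_data(df: pd.DataFrame, time_col: str, expected_interval: str) -> dict:
    """Summarize missing values and sampling gaps in a sensor log."""
    ts = pd.to_datetime(df[time_col]).sort_values()
    gaps = ts.diff().dropna()
    expected = pd.Timedelta(expected_interval)
    return {
        "rows": len(df),
        "span": ts.iloc[-1] - ts.iloc[0],
        # Share of missing values per sensor column
        "missing_ratio": df.drop(columns=[time_col]).isna().mean().round(3).to_dict(),
        # Gaps noticeably longer than the nominal sampling interval
        "gaps_over_expected": int((gaps > expected * 1.5).sum()),
        "largest_gap": gaps.max(),
    }


log = pd.DataFrame({
    "timestamp": ["2024-01-01 00:00:00", "2024-01-01 00:00:01", "2024-01-01 00:00:02",
                  "2024-01-01 00:00:07", "2024-01-01 00:00:08"],
    "temperature": [70.1, 70.2, None, 70.4, 70.5],
    "vibration": [2.1, 2.0, 2.2, None, None],
})
report = profile_sensor_data(log, "timestamp", "1s")
for key, value in report.items():
    print(f"{key}: {value}")
```

Numbers like these make it easier to decide whether historical data is good enough to train on, or whether sensors, connectivity, or logging need attention first.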

Cloud and Edge Orchestration

Modern digital twins operate across hybrid environments. Edge devices collect and preprocess data to minimize latency, while cloud infrastructure provides compute power for AI/ML model training and large-scale simulations.

- Strategy Tip: Use containerized microservices to deploy components at the edge or in the cloud as needed. Docker is commonly used to package these containers, and Kubernetes to orchestrate them.

Model Development and Validation

Once data pipelines are operational, enterprises should focus on building, training, and validating predictive models. Open-source frameworks such as TensorFlow, PyTorch, and Scikit-learn are often used to implement algorithms like LSTM, Isolation Forest, or XGBoost.

- Start with pre-trained models where possible, especially for common failure modes.

- Incorporate domain expertise to fine-tune model assumptions and thresholds.
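For time-series models, validation should respect time order so a model is never scored on data older than what it trained on. A minimal sketch with scikit-learn's `TimeSeriesSplit` on a synthetic temperature series; the signal, the five lag features, and the choice of Ridge regression are assumptions for illustration, and the same loop structure applies to an LSTM or XGBoost model.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import TimeSeriesSplit

# Synthetic temperature signal: slow drift + periodic cycle + noise (hypothetical)
rng = np.random.default_rng(1)
t = np.arange(2000)
temp = 60 + 0.002 * t + 3 * np.sin(2 * np.pi * t / 96) + rng.normal(0, 0.3, t.size)

# Lag features: predict temp[j + lags] from temp[j], ..., temp[j + lags - 1]
lags = 5
X = np.column_stack([temp[i:len(temp) - lags + i] for i in range(lags)])
y = temp[lags:]

splitter = TimeSeriesSplit(n_splits=5)
scores = []
for fold, (train_idx, test_idx) in enumerate(splitter.split(X)):
    # Every training index precedes every test index in time
    model = Ridge(alpha=1.0).fit(X[train_idx], y[train_idx])
    mae = mean_absolute_error(y[test_idx], model.predict(X[test_idx]))
    scores.append(mae)
    print(f"fold {fold}: train={len(train_idx)}, test={len(test_idx)}, MAE={mae:.3f}")
print(f"mean MAE: {np.mean(scores):.3f}")
```

Unlike a shuffled split, each fold's training window grows forward in time, which mimics how the model will actually be retrained and used in production.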

Visualization, Feedback Loops, and Governance

High-performing digital twins rely on interactive dashboards that provide actionable insights to both technical and operational teams. Integrating feedback loops—where model outcomes are reviewed and corrected—improves twin accuracy over time.

- Visualization Tools: Grafana, Power BI, and Tableau are commonly used.

- Governance Needs: Define access controls, data lineage tracking, and audit logs to ensure regulatory compliance and model integrity.

Upskilling, Change Management, and Cultural Adoption

A successful deployment is not just about technology—it also requires people and processes to adapt. Upskilling programs in AI, IoT, and data analytics are crucial to ensure internal capabilities grow with the technology.

- Run workshops for engineers, IT teams, and operations managers.

- Establish digital twin centers of excellence (CoEs) to consolidate knowledge and ensure long-term capability development.

Continuous Monitoring and Scaling

Once a pilot has proven successful, organizations can expand coverage by onboarding additional assets, sites, or business units. Metrics to monitor include:

- Model accuracy and drift

- Asset downtime reductions

- ROI and operational savings

- Scaling Tip: Adopt a federated architecture to allow local customization of twins while maintaining global consistency.
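Drift monitoring can start with a simple distribution check. A minimal sketch of the Population Stability Index (PSI), which compares the binned distribution of a feature in recent data against a reference window; the 0.1 and 0.25 alert levels in the comments are common rules of thumb rather than a standard, and the synthetic vibration data is invented.

```python
import numpy as np


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index of `current` relative to `reference` (one feature)."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior bin edges from reference quantiles: each bin holds ~1/bins of the reference
    inner_edges = np.quantile(reference, np.linspace(0, 1, bins + 1)[1:-1])
    ref_counts = np.bincount(np.searchsorted(inner_edges, reference, side="right"), minlength=bins)
    cur_counts = np.bincount(np.searchsorted(inner_edges, current, side="right"), minlength=bins)
    ref_frac = np.clip(ref_counts / len(reference), eps, None)  # avoid log(0)
    cur_frac = np.clip(cur_counts / len(current), eps, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))


rng = np.random.default_rng(7)
baseline = rng.normal(3.0, 0.5, 5000)   # vibration during commissioning
same = rng.normal(3.0, 0.5, 5000)       # later data, no change
shifted = rng.normal(3.6, 0.6, 5000)    # later data after, say, bearing wear

# Rule of thumb: < 0.1 stable, 0.1-0.25 watch, > 0.25 investigate or retrain
print(f"PSI unchanged: {psi(baseline, same):.3f}")
print(f"PSI shifted:   {psi(baseline, shifted):.3f}")
```

Running a check like this per feature and per site fits naturally with a federated setup: each local twin monitors its own inputs, while the alert thresholds stay globally consistent.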

Conclusion: A Strategic Imperative

Digital twins, paired with predictive analytics, offer organizations a strategic lever to enhance operational resilience, reduce costs, and gain foresight into system behaviors. With robust growth forecasts and expanding industry applications, these technologies are not optional innovations but competitive necessities.

Leaders like Siemens, Microsoft, and NVIDIA are setting benchmarks in scalable deployment, while emerging startups push the frontier of simulation and visualization. As enterprises face increasing complexity and demand for sustainability, digital twins stand out as a unifying framework for informed decision-making.

Companies that invest early in this convergence of the physical and digital will not only improve efficiency but also unlock new business models, enabling a future where systems are self-optimizing, predictive, and adaptive by design.

References

1- Grand View Research – Digital Twin Market Size & Forecast

The global digital twin market was valued at US$24.97 billion in 2024 and is projected to reach US$155.84 billion by 2030, with a CAGR of 34.2%. https://www.grandviewresearch.com/industry-analysis/digital-twin-market

2- Fortune Business Insights – Market Outlook through 2032

Forecast indicates growth from US$24.48 billion in 2025 to US$259.32 billion by 2032, a CAGR of approximately 40%. (fortunebusinessinsights.com)

3- Siemens Press Release – Daimler Truck & Siemens Collaboration

Announced in March 2023: the integration of Siemens Xcelerator and Teamcenter to build a global digital engineering platform for Daimler Truck.

4- Digital Engineering 24/7 – Industry Report

Coverage of the Daimler Truck & Siemens joint initiative, highlighting deployment of Teamcenter, NX, and Simcenter tools. https://www.digitalengineering247.com/article/daimler-truck-collaborates-with-siemens

5- arXiv – Digital Twin: Enabling Technologies & Challenges (2024)

A rigorous academic survey covering digital twin architecture, IoT integration, and application domains. https://arxiv.org/abs/2412.00209

6- arXiv – Modeling & Enabling Technologies Review (2022)

In-depth review of modeling methods and system-enablement techniques for digital twins in Industry 4.0.

Glossary of Key Terms

1. Digital Twin

A virtual model of a physical asset, system, or process that is continuously updated with real-time data from sensors and simulations. It allows companies to monitor performance, run what-if scenarios, predict failures, and improve efficiency without touching the real asset.

2. IoT (Internet of Things)

A network of physical devices—like machines, appliances, or wearables—embedded with sensors, software, and internet connectivity. These devices collect and share data, enabling remote monitoring, automation, and real-time decision-making.

3. Edge Computing

Processing data at or near the source (e.g., on the shop floor or inside a machine) rather than sending it all to the cloud. This reduces latency, saves bandwidth, and enables real-time actions, especially in time-critical environments like factories or hospitals.

4. Cloud Platform

Cloud platforms like AWS, Microsoft Azure, and Google Cloud offer the infrastructure to store, process, and analyze large volumes of data. They provide tools for AI, machine learning, security, and device management—forming the digital backbone of enterprise operations.

5. Predictive Analytics

A set of AI and statistical techniques that analyze historical and real-time data to predict future events, such as equipment failure, demand spikes, or patient risk. It helps organizations take action before problems occur, saving costs and improving reliability.

6. Machine Learning (ML)

A form of artificial intelligence where computers learn from data—identifying patterns and making decisions without being explicitly programmed. ML powers everything from predictive maintenance to anomaly detection in digital twin systems.

7. LSTM (Long Short-Term Memory)

A type of neural network designed to understand sequences of data—like temperature changes over time. LSTM models “remember” useful past information to forecast future states, often used in time-series prediction within digital twins.

8. Isolation Forest

An unsupervised machine learning model used for anomaly detection. It isolates data points that behave unusually (e.g., sudden sensor spikes) by splitting them across random decision trees. The quicker something is isolated, the more likely it’s an outlier.

9. Random Forest / XGBoost

These are ensemble learning algorithms that use multiple decision trees to make accurate predictions. They’re widely used in digital twins to forecast outcomes, classify failures, or rank factors contributing to issues.

Random Forest: Builds multiple trees independently and takes the majority vote or average.

XGBoost: Builds trees sequentially, with each new tree correcting the errors of the previous ones (often more accurate, but more sensitive to hyperparameter tuning).

10. Reinforcement Learning (RL)

An AI technique where an agent learns by trial and error, getting rewarded for good decisions and penalized for bad ones. It’s used in dynamic systems like smart buildings or autonomous robots, where conditions keep changing and the system needs to adapt on its own.

11. Federated Architecture

A modular system design where different parts of a digital twin ecosystem (across factories, hospitals, cities, etc.) operate independently but share a common standard or framework. It allows each unit to customize local models while maintaining global consistency, which makes it well suited to scaling digital twins across diverse business units or geographies.

12. Orchestration Tools

Software that helps coordinate and manage multiple components (like microservices, models, databases, or edge devices) in a complex digital ecosystem. Docker packages components into portable containers, and Kubernetes orchestrates those containers so that AI models, dashboards, and data pipelines are deployed efficiently, scaled on demand, and kept in sync across cloud and edge environments.

Discover more from Poniak Times
